Getting Started with Apache Airflow

1. Introduction

This article introduces Apache Airflow: how to install it, what its main components are, and how to run a first Hello World DAG.

Airflow was started at Airbnb in 2014 and open-sourced in 2015. The project has more than 1,000 contributors in the Apache community. Apache Airflow is used to automate tasks and improve efficiency, typically tasks related to data collection, preprocessing, uploading, and reporting.

2. Apache Airflow

Apache Airflow is a workflow automation and scheduling platform for data pipelines. It executes the tasks in a pipeline in a specified order using the appropriate resources, and it provides a user interface to track and manage workflows.

2.1 Prerequisites

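This article uses Python 3.8.8. Airflow runs on Linux and macOS; on Windows it is typically run through WSL 2 or Docker rather than natively. An IDE such as PyCharm is convenient for Python programming but is optional.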

2.2 Download

Python 3.8.8 can be downloaded from the official Python website. PyCharm is available from the JetBrains website.

2.3 Setup

2.3.1 Python Setup

To install Python, run the downloaded package or installer.

2.3.2 Airflow Setup

You can install Apache Airflow by using the command below:

Airflow installation

pip3 install apache-airflow
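After installation, a quick check can confirm that the interpreter and the package are in place. The following is a minimal sketch using only the standard library; the helper name `check_environment` and the minimum version `(3, 8)` (taken from the Python version used in this article) are assumptions for illustration.

```python
import importlib.util
import sys


def check_environment(min_version=(3, 8)):
    """Report whether Python is recent enough and Airflow is importable."""
    return {
        # Compare only (major, minor) against the required minimum.
        "python_ok": sys.version_info[:2] >= tuple(min_version),
        # find_spec returns None when the top-level package is not installed.
        "airflow_installed": importlib.util.find_spec("airflow") is not None,
    }


for name, ok in check_environment().items():
    print(f"{name}: {ok}")
```

If `airflow_installed` is reported as `False`, the usual cause is that `pip3` installed the package into a different environment than the one running the script.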

2.4 Features

Apache Airflow is easy to use. It is open source and part of the Apache community. It provides integrations for running workloads on different cloud platforms. Workflows are written in Python. The user interface helps in tracking the status of workflow tasks.

2.5 Components

Apache Airflow has four main components: the DAG, the webserver, the metadata database, and the scheduler.

- DAG: a directed acyclic graph that defines the tasks and the relationships between them. DAGs are written in Python.

- Webserver: a Flask-based web application that serves the user interface.

- Metadata database: stores Airflow task information, including task status.

- Scheduler: decides which tasks are ready to run, triggers them, and updates the task status in the metadata database.

Data pipelines can be dynamic because they are configured in Python code. They are extensible through different operators and executors, and they can be parameterized in Python scripts. Airflow has a modular architecture and is scalable: it uses a message queue to communicate with an arbitrary number of workers.
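To make the idea of a directed acyclic graph concrete, the sketch below derives a valid run order from task dependencies using Kahn's algorithm. It is plain Python and does not use Airflow; the function name `execution_order` and the four task names (borrowed from the task types mentioned in the introduction) are assumptions for illustration, and Airflow's own scheduler is considerably more involved.

```python
from collections import deque


def execution_order(dependencies):
    """Return one valid run order for tasks, or raise if there is a cycle.

    `dependencies` maps each task name to the tasks that must finish first.
    """
    # Collect every task mentioned, either as a key or as an upstream task.
    tasks = set(dependencies)
    for upstream in dependencies.values():
        tasks.update(upstream)

    # Count unmet upstream tasks and index the downstream tasks of each task.
    remaining = {task: 0 for task in tasks}
    downstream = {task: [] for task in tasks}
    for task, upstream in dependencies.items():
        for parent in set(upstream):
            remaining[task] += 1
            downstream[parent].append(task)

    # Kahn's algorithm: repeatedly run a task with no unmet dependencies.
    ready = deque(sorted(task for task, count in remaining.items() if count == 0))
    order = []
    while ready:
        task = ready.popleft()
        order.append(task)
        for child in sorted(downstream[task]):
            remaining[child] -= 1
            if remaining[child] == 0:
                ready.append(child)

    if len(order) != len(tasks):
        raise ValueError("dependencies contain a cycle, so this is not a DAG")
    return order


pipeline = {
    "collect": [],
    "preprocess": ["collect"],
    "upload": ["preprocess"],
    "report": ["preprocess"],
}
print(execution_order(pipeline))  # ['collect', 'preprocess', 'report', 'upload']
```

The "acyclic" requirement is what makes such an order exist: if two tasks depended on each other, neither could ever become ready, which is why the function raises an error in that case.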

2.6 Apache Airflow HelloWorld

You need to activate the environment, set AIRFLOW_HOME, and initialize the Airflow database using the commands below:

Airflow Database Setup

source activate airflow-tutorial

export AIRFLOW_HOME="$(pwd)"

airflow db init
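The `export` command above makes the current directory the Airflow home. With the default configuration, Airflow falls back to `~/airflow` when `AIRFLOW_HOME` is unset and looks for DAG files in a `dags` subfolder of the home directory. The sketch below resolves that folder with the standard library only; the helper name `default_dags_folder` is hypothetical, and a `dags_folder` value changed in `airflow.cfg` is not taken into account.

```python
import os
from pathlib import Path


def default_dags_folder(environ=None):
    """Return the DAGs folder Airflow uses under its default configuration."""
    environ = os.environ if environ is None else environ
    # Airflow falls back to ~/airflow when AIRFLOW_HOME is not set.
    home = Path(environ.get("AIRFLOW_HOME", "~/airflow")).expanduser()
    # Assumption: dags_folder in airflow.cfg still has its default value.
    return home / "dags"


print(default_dags_folder())
```

Running this in the same shell session as the `export` command shows where DAG files are expected to be placed.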

Next, you can create the admin user using the command below:

Airflow Admin user creation

airflow users create --role Admin --username admin --email admin --firstname admin --lastname admin --password admin

Running the command produces output similar to the following:

Airflow Admin user creation Output

(airflow-venv) apples-MacBook-Air:apacheairflow bhagvan.kommadi$ airflow users create --role Admin --username admin --email admin --firstname admin --lastname admin --password admin

[2022-01-03 01:54:50,763] {manager.py:763} WARNING - No user yet created, use flask fab command to do it.

[2022-01-03 01:54:51,295] {manager.py:512} WARNING - Refused to delete permission view, assoc with role exists DAG Runs.can_create Admin

[2022-01-03 01:54:53,936] {manager.py:214} INFO - Added user admin

User "admin" created with role "Admin"

You can now write the Hello World DAG using the code below.

Airflow HelloWorld

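from datetime import datetime

from airflow import DAG
# Airflow 2.x module paths; dummy_operator and python_operator are deprecated aliases.
from airflow.operators.dummy import DummyOperator
from airflow.operators.python import PythonOperator


def print_hello_world():
    # The return value is written to the task log.
    return 'Hello world!'


# Run once a day at 12:00; catchup=False skips runs missed since start_date.
dag = DAG('hello_world', description='Hello World DAG',
          schedule_interval='0 12 * * *',
          start_date=datetime(2022, 1, 6), catchup=False)

# Placeholder task that does nothing; retried up to 3 times on failure.
dummy_operator = DummyOperator(task_id='dummy_task', retries=3, dag=dag)

# Task that calls print_hello_world.
hello_world_operator = PythonOperator(task_id='hello_world_task', python_callable=print_hello_world, dag=dag)

# dummy_task must finish before hello_world_task starts.
dummy_operator >> hello_world_operator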
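The `>>` operator on the last line of the DAG declares that `dummy_task` runs before `hello_world_task`. As a rough mental model, and not Airflow's actual implementation, the same syntax can be reproduced in plain Python by overloading `__rshift__`. The `Task` class and the extra `done_task` below are hypothetical and exist only for illustration.

```python
class Task:
    """Toy stand-in for an Airflow operator (hypothetical, for illustration)."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.downstream = []  # tasks that run after this one

    def __rshift__(self, other):
        # `a >> b` records b as downstream of a.
        self.downstream.append(other)
        # Returning the right-hand task allows chains such as a >> b >> c.
        return other


dummy = Task("dummy_task")
hello = Task("hello_world_task")
done = Task("done_task")  # extra task, only here to show chaining

dummy >> hello >> done

print([t.task_id for t in dummy.downstream])  # ['hello_world_task']
print([t.task_id for t in hello.downstream])  # ['done_task']
```

Because the expression returns its right-hand operand, a whole pipeline can be written left to right on one line, which is the style the Hello World DAG uses.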

After placing the Python file in the dags folder under the Airflow home directory, start the web server using the command below:

Airflow WebServer

airflow webserver -p 8081

You also need to start the Airflow scheduler, in a separate terminal, using the command below:

Airflow Scheduler

airflow scheduler
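Once both processes are running, the Airflow 2 webserver exposes a `/health` endpoint that reports the status of the metadata database and the scheduler as JSON. The following sketch uses only the standard library and assumes the port 8081 used above; the helper names `is_healthy` and `fetch_health` are hypothetical, and the sample payload is illustrative rather than captured output.

```python
import json
from urllib.request import urlopen


def is_healthy(payload):
    """Return True if the metadata database and scheduler both report healthy."""
    report = json.loads(payload)
    required = ("metadatabase", "scheduler")
    # Each component entry is expected to carry a "status" field.
    return all(
        report.get(name, {}).get("status") == "healthy" for name in required
    )


def fetch_health(base_url="http://localhost:8081", timeout=5):
    """Fetch the raw /health response from a running webserver."""
    with urlopen(f"{base_url}/health", timeout=timeout) as response:
        return response.read().decode("utf-8")


# Illustrative payload; a live check would use is_healthy(fetch_health()).
sample = '{"metadatabase": {"status": "healthy"}, "scheduler": {"status": "healthy"}}'
print(is_healthy(sample))  # True
```

Keeping the parsing separate from the network call makes the check easy to try without a running server. If the scheduler has not been started, the scheduler entry would be expected to report a status other than `healthy`.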

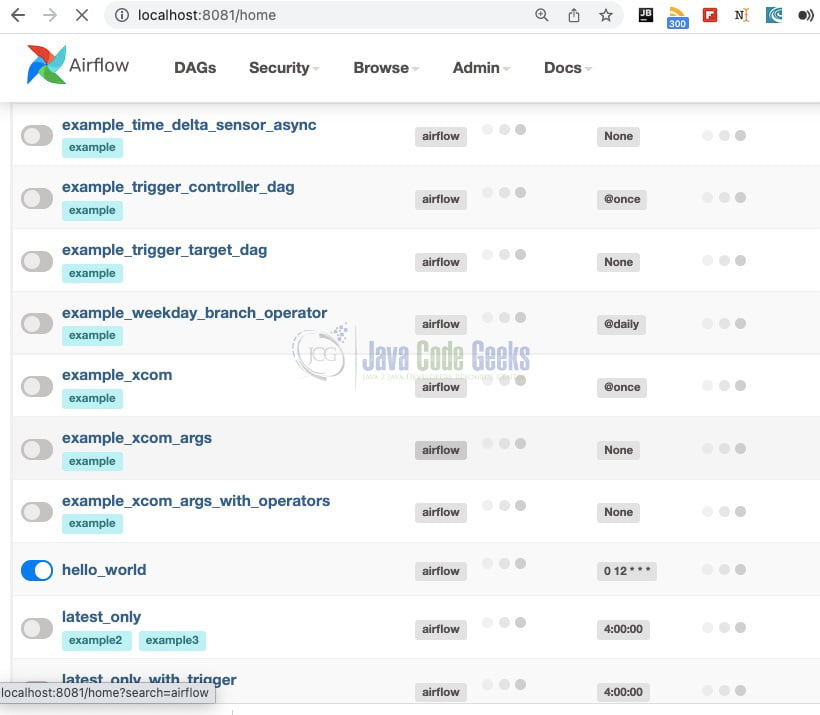

After logging in to the web application (http://localhost:8081) with the admin username and password, you can see the DAG created using the code above in the list of DAGs.

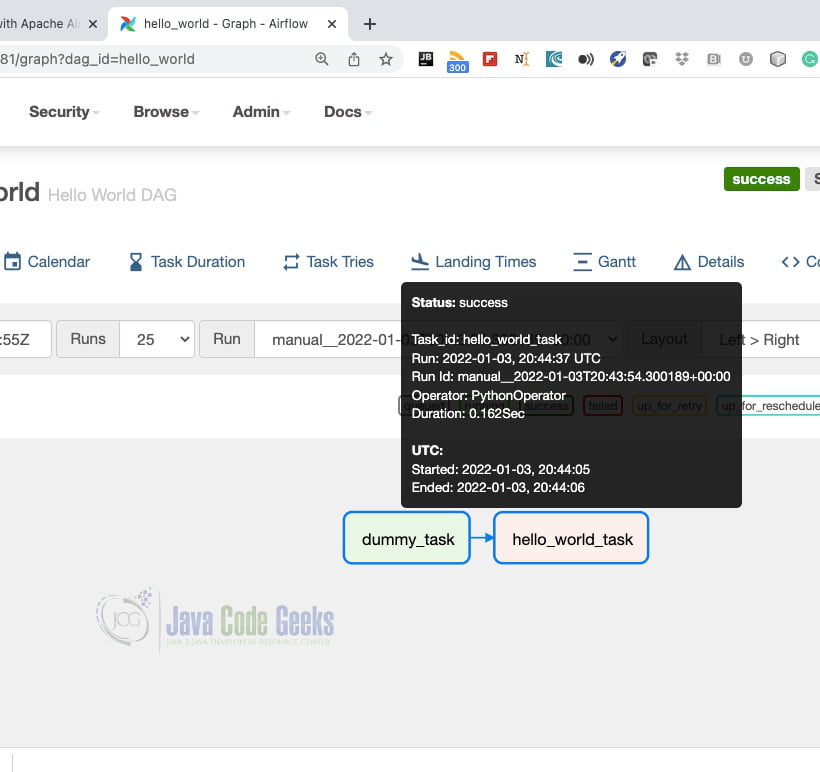

You can click on the hello_world DAG and execute the tasks. The output will be as shown in the graph view below.

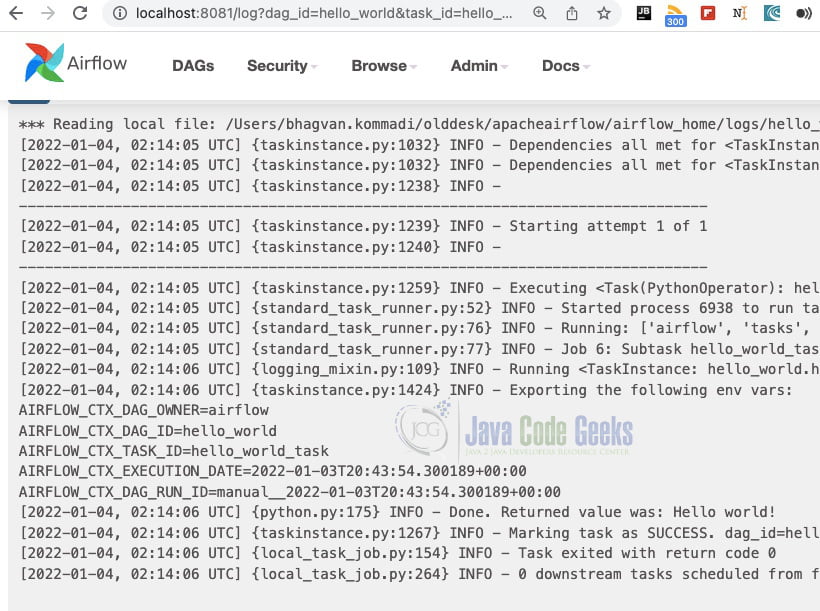

You can view the Hello World task log by clicking on the task's status popup.

2.7 Use Cases

Apache Airflow is used for the following tasks:

- Sequencing

- Coordination

- Scheduling

- Complex Data operations

Airflow is used in applications in the areas mentioned below:

- Business Intelligence Applications

- Data Science Applications

- Machine Learning Models

- Big data applications

Apache Airflow is extensible and serves many different use cases. Workflows are declared in terms of the dependencies between tasks, and each step can be defined as a separate unit. Because pipelines are written in Python, they can be kept under version control, which helps with versioning and change management.

3. Download the Source Code

You can download the full source code of this example here: Getting Started with Apache Airflow