Hadoop Getmerge Example

In this example, we will look at merging multiple files stored in HDFS (Hadoop Distributed File System) into a single file with Apache Hadoop, specifically using the getmerge command.

1. Introduction

Merging is a task that comes up often in Hadoop, and most of the time the files are too numerous or too large to be merged with a text editor or any other kind of editor. That is why Hadoop and HDFS provide a way to merge files from the command line. Before we go further, if you are new to Apache Hadoop and HDFS, it is recommended to go through the following articles to get a clear picture of what is happening (especially the third and fourth ones):

- The Hadoop Ecosystem Explained

- Big Data Hadoop Tutorial for Beginners

- Apache Hadoop Distributed File System Explained

- Apache Hadoop FS Commands Example

Once the basics from the above articles are clear, we can look into the getmerge command explained in this example.

2. Merging Files

Merging multiple files comes in handy frequently. Sometimes the input files in HDFS are split up and we want them combined into a single file, or a couple of files, instead of many small files. In other cases, a MapReduce job that uses multiple reducers produces multiple output files (one per reducer), and we want to merge them so that we have a single output file for the task.

In those cases, the HDFS getmerge command is very useful: it reads the files present under a given HDFS path, concatenates them, and writes the result as a single file to the given path on the local file system.

Command Syntax:

hadoop fs -getmerge [-nl] <source-path> <local-system-destination-path>

Example:

hadoop fs -getmerge -nl /user/example-task/files Desktop/merged-file.txt

Command Parameters:

The getmerge command takes three parameters, two required and one optional:

- Source Path: the HDFS path of the directory containing all the files that need to be merged into one.

- Destination Path: the local file path and name where the merged output file will be stored.

- New Line (-nl): an optional flag which, if included, adds a newline character at the end of each file in the merged result.
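To make the effect of -nl concrete, here is a minimal sketch that emulates the merge on the local file system with plain POSIX shell. It is not Hadoop code and never touches HDFS; local_getmerge is a hypothetical helper name, and merging the input files in byte-wise name order is an assumption about how getmerge orders its inputs.

```sh
# Hypothetical helper: local emulation of
#   hadoop fs -getmerge [-nl] <src-dir> <dst>
# It only reads local files; it does NOT talk to HDFS.
# Assumption: files are merged in byte-wise name order (LC_ALL=C glob order).
local_getmerge() (
  add_newline=0
  if [ "$1" = "-nl" ]; then
    add_newline=1
    shift
  fi
  [ "$#" -eq 2 ] || { echo "usage: local_getmerge [-nl] <src-dir> <dst>" >&2; exit 2; }
  src_dir=$1
  dst=$2
  [ -d "$src_dir" ] || { echo "not a directory: $src_dir" >&2; exit 1; }
  LC_ALL=C
  export LC_ALL
  : > "$dst"                    # create or truncate the destination file
  for f in "$src_dir"/*; do
    [ -f "$f" ] || continue     # only regular files; sub-directories are skipped
    cat "$f" >> "$dst"          # append the file's bytes unchanged
    if [ "$add_newline" -eq 1 ]; then
      printf '\n' >> "$dst"     # -nl: one newline after each file
    fi
  done
)
```

For example, with -nl a directory holding a.txt ("one" plus a newline) and b.txt ("two" with no trailing newline) merges into "one", an empty line, then "two" and a final newline. In other words, files that already end with a newline are separated by a blank line when -nl is used.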

3. HDFS Example

In this section, we will go step by step through merging files with the getmerge command and present the output.

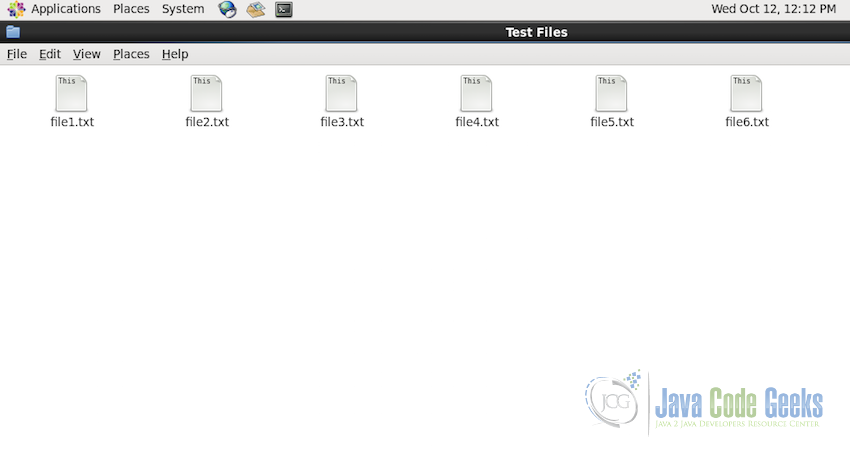

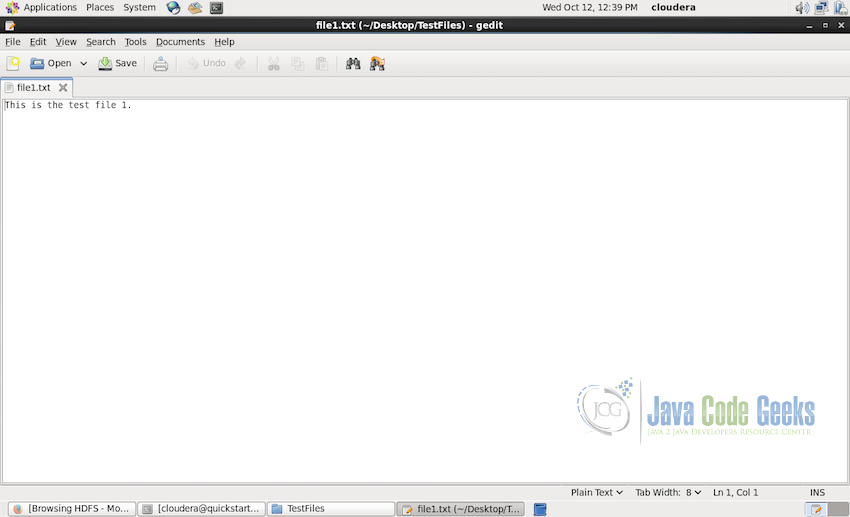

- Each file in the test folder is a simple text file containing a single line with the number of the file, as shown in the screenshot below for the first file:

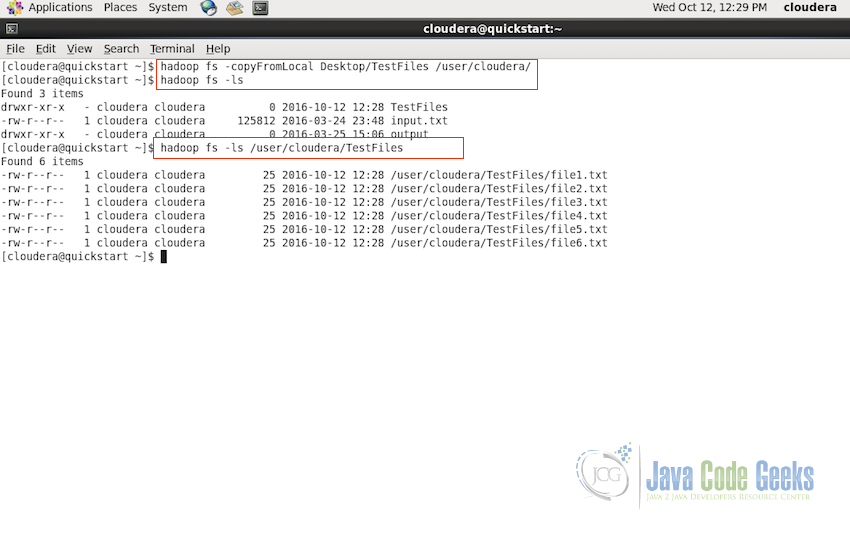
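If you want to reproduce the test folder yourself, a minimal sketch like the following creates it. The names file2.txt to file6.txt and the exact content of each file (just its number) are assumptions based on the description above, and the relative path Desktop/TestFiles assumes you run it from your home directory, as the commands in this example do.

```sh
# Hypothetical setup: recreate six one-line test files locally.
# Assumptions: run from the home directory, files are named
# file1.txt .. file6.txt, and each file holds only its own number.
mkdir -p Desktop/TestFiles
for i in 1 2 3 4 5 6; do
  printf '%s\n' "$i" > "Desktop/TestFiles/file$i.txt"
done
ls Desktop/TestFiles            # quick visual check of the created files
```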

- The next step is to upload the files to the Hadoop Distributed File System (HDFS). We will use the copyFromLocal HDFS command to copy the files from the local file system to HDFS:

hadoop fs -copyFromLocal Desktop/TestFiles /user/cloudera

This will copy the whole TestFiles folder with all 6 files from Desktop/TestFiles to /user/cloudera. Next, use the ls command as shown below to check that the folder was created and the files were copied to the destination successfully:

hadoop fs -ls

hadoop fs -ls /user/cloudera/TestFiles

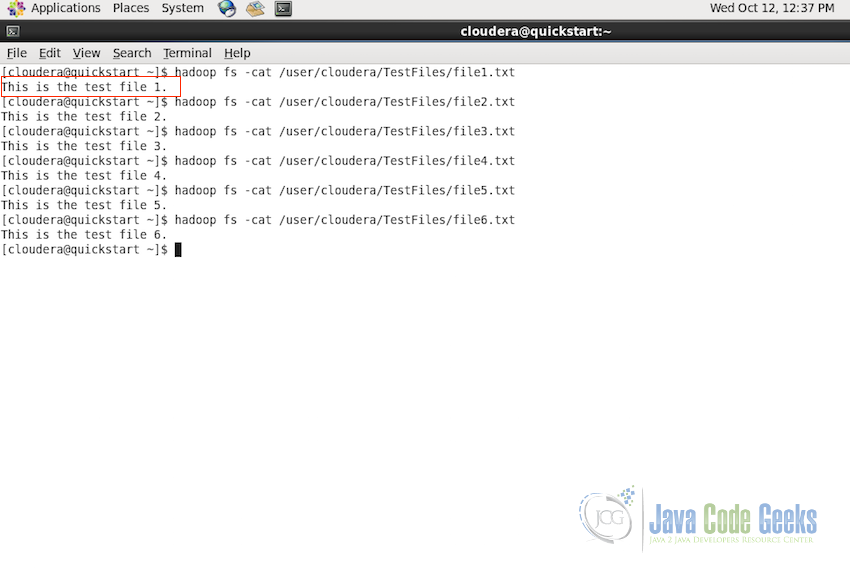

- We can also check the content of the files copied into HDFS using the cat command. This is an optional step to make sure the content is correct; you can skip it if you like.

hadoop fs -cat /user/cloudera/TestFiles/file1.txt

In the screenshot below, the content of all 6 files is shown:

- Now it's time to see how the getmerge command works. Execute the following command:

hadoop fs -getmerge -nl /user/cloudera/TestFiles Desktop/MergedFile.txt

This command merges all 6 files present in the /user/cloudera/TestFiles folder into one file and saves it to Desktop/MergedFile.txt on the local file system. Because we used the -nl flag, a newline character is appended after the content of each file. The following screenshot shows the command in action; if there is no output, the command was successful.

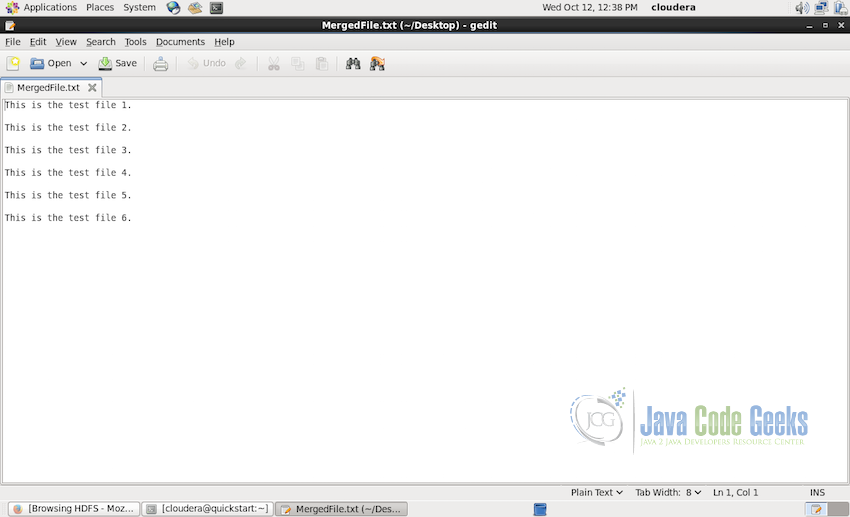

- The following screenshot shows the final merged output file, Desktop/MergedFile.txt:
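To confirm the result without relying on a screenshot, you can rebuild what getmerge -nl should produce from the local copies of the files and compare it byte for byte with the merged file. This is a sketch under assumptions: verify_getmerge_nl is a hypothetical helper, the local files are assumed identical to the ones uploaded to HDFS, and the files are assumed to be merged in byte-wise name order.

```sh
# Hypothetical helper: check that a merged file matches what
# "hadoop fs -getmerge -nl" should produce from local source copies.
# Assumptions: local copies equal the uploaded files; byte-wise name order.
verify_getmerge_nl() (
  src_dir=$1
  merged=$2
  LC_ALL=C
  export LC_ALL
  expected=$(mktemp) || exit 2
  for f in "$src_dir"/*; do
    [ -f "$f" ] || continue
    cat "$f"
    printf '\n'                 # the newline that -nl appends after each file
  done > "$expected"
  cmp -s "$expected" "$merged"  # status 0 only if byte-identical
  status=$?
  rm -f "$expected"
  exit "$status"
)
```

For this example, running verify_getmerge_nl Desktop/TestFiles Desktop/MergedFile.txt && echo "merge OK" from your home directory would print "merge OK" only when the two files match exactly.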

4. Summary

In this example article, we looked into the HDFS getmerge command in detail. We started by understanding the syntax of the command and all its parameters. We then went step by step through the process of merging files from HDFS using the command.

To summarize, the getmerge command takes three parameters: the source path, the destination path, and an optional newline flag. We also used a couple of other commands during the example, such as ls, cat, and copyFromLocal.

Let me know in the comments if you need any more details or if anything is not clear.