Apache Spark Tutorial for Beginners

In this post, we feature a comprehensive Apache Spark Tutorial for Beginners. We will be looking at Apache Spark in detail, how is it different than Hadoop, and what are the different components that are bundled in Apache Spark.

Also, we will look at RDDs, which is the heart of Spark and a simple example of RDD in java.

Table Of Contents

1. Apache Spark Tutorial – Introduction

Apache Spark is a cluster computing technology, built for fast computations. It efficiently extends Hadoop’s MapReduce model to use it for multiple more types of computations like iterative queries and stream processing.

The main feature of Apache Spark is an in-memory computation which significantly increases the processing speed of the application.

Spark is built to work with a range of workloads like batch applications, interactive queries, iterative algorithms, and streaming data.

2. Spark vs Hadoop

Contrary to a popular belief, Spark is not a replacement for Hadoop. Spark is a processing engine, which functions on top of the Hadoop ecosystem.

Hadoop is used extensively by various industries to analyze huge amounts of data. Since Hadoop is distributed in nature and uses the Map-Reduce programming model, it is scalable, flexible, cost-effective and fault-tolerant. However, the main concern with Hadoop is the speed of processing with a large dataset as Hadoop is built to write intermediate results in HDFS and then read them back from disk which increases significantly when data has to be written or read back from disk multiple times during processing.

Spark is built on top of the Hadoop MapReduce model and extends it for interactive queries and real-time stream processing. Spark has its own cluster management and it uses Hadoop for storage and for processing. Since Spark uses memory to store intermediate processed data, it reduces the number of read/writes operations to disk which is what makes Spark almost 100 times faster than Hadoop. Spark also provides built-in APIs in Java, R, Python, and Scala.

3. Components of Spark

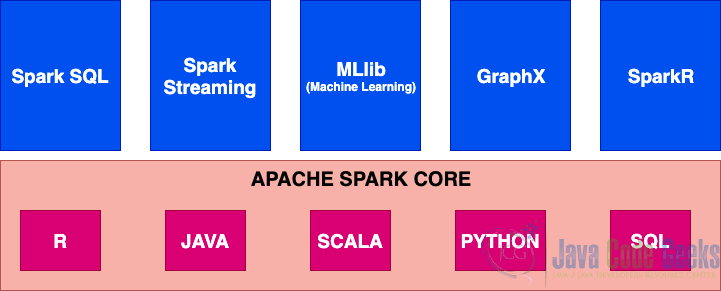

The following diagram shows the different components of Apache Spark.

Components of Spark

3.1 Apache Spark Core

All of the functionalities provided by Apache Spark are built on top of Apache Spark Core. Spark core is what provides the processing speed by providing in-memory computation.

RDD (Resilient Distributed Dataset) is at the heart of the Spark Core which provides distributed, in-memory processing of the dataset. Typically two types of actions are performed on RDDs:

- Transformation – This is a function that generates new RDD from existing RDD after performing some operations on the existing RDD.

- Action – RDDs are created from one another. But when we want to work on an actual dataset, then Action is used.

3.2 Apache Spark SQL

Spark SQL is a distributed framework for structured data processing. Data can be queried using either SQL or DataFrame API.

Spark SQL provides a uniform way of accessing data from various types of data sources like Hive, Avro, Parquet, ORC, JSON, JDBC, etc. we can even join data across these sources. Spark SQL supports HiveQLas well as Hive SerDes and UDFs, which makes it easy to work with existing Hive data warehouses.

In order to improve performance and scalability, Spark SQL includes a cost-based optimizer, columnar storage, and code generation to improve query performance. At the same time, it can scale to thousands of nodes and multi-hour queries using the Spark engine which provides mid query fault-tolerance.

3.2 Apache Spark Streaming

Spark streaming is an add-on to Spark core and provides scalable, fault-tolerant, performant processing of live streams. Spark streaming can access data from various types of streams like Kafka, Flume, Kinesis, etc. Data processed can be pushed to file systems, dashboards, or databases.

Spark uses micro-batching for real-time streaming. Micro-batching is a technique that allows a process to treat a stream of data as a sequence of small batches of data for processing. So Spark Streaming groups the live stream into small batches and then passes it to a batch system for processing.

3.3 Apache Spark MLlib

MLlib is Spark’s scalable machine learning library for both high-quality algorithm and high speed. The objective of the MLlib is to make machine learning scalable and easy. MLlib can be plugged into Hadoop workflows and Hadoop data sources.

MLlib contains high-quality algorithms that leverage iteration and can yield better results than the one-pass approximations sometimes used on MapReduce.

3.4 Apache Spark GraphX

Spark GraphX is an API for graphs and graph parallel computation. GraphX helps in building a view by combining graphs and collections data, transforming and enriching graphs with RDD and write custom interactive graph algorithms using Pregel API.

GraphX also optimizes the way in which vertex and edges can be represented when they are primitive data types. Clustering, classification, traversal, searching, and pathfinding is also possible in graphs.

3.5 Apache SparkR

SparkR is an R package that provides a light-weight frontend to use Apache Spark with R. Key component of SparkR is SparkR DataFrame. R also provides software facilities for data manipulation, calculation, and graphical display. Hence, the main idea behind SparkR was to explore different techniques to integrate the usability of R with the scalability of Spark.

4. Apache Spark RDD

RDD (Resilient Distributed Dataset) is an abstraction provided by Spark. It is a collection of data partitioned across nodes of the Spark cluster for processing. RDDs are created in two ways:

- By reading a file from either the Hadoop File System or any other Hadoop supported file system and transforming it.

- By parallelizing the existing collection in the driver program.

Users may request to persist RDD in memory for later use. In the case of node failures, RDDs can recover automatically.

RDD supports two types of operations: transformations and actions. Transformations create a new dataset from existing ones while actions, return a value to the driver program after performing computations on the dataset.

All transformations in RDD are lazy by default. This means that transformations are not computed immediately but a DAG (Directed Acyclic Graph) is maintained internally to track all the transformations and once an action is performed on that RDD, all the transformations are executed from DAG. This design improves the efficiency of the Spark.

Since transformations are not carried out immediately, whenever a new action is performed on RDD, transformations have to be re-run. To optimize performance, RDDs can be persisted in memory intermediately to avoid running transformations each time.

5. An Example with RDD

In this example, we will see how to read data from a file and count the occurrences of the word in the file.

5.1 Apache Spark Dependency

Before we start with the code, spark needs to be added as a dependency for application. We will add below dependency in pom.xml. Just spark-core is good for the example, in case you need to use other modules like SQL, Streaming, those dependencies should be added additionally.

org.apache.spark

spark-core_2.12

2.4.5

5.2 Building the code

Below are creating java spark context. We will need to set some configurations in spark like here we have defined that the master node is running on localhost and explicitly 2GB of memory has been allocated to the Spark process.

SparkConf sparkConf = new SparkConf().setAppName("Word Count").setMaster("local").set("spark.executor.memory","2g");

JavaSparkContext sc = new JavaSparkContext(sparkConf);File to be processed is read from the local file system first. After that, a list is created by splitting words in the file by space (assuming that no other delimiter is present).

JavaRDD inputFile = sc.textFile(filename);

JavaRDD wordsList = inputFile.flatMap(content -> Arrays.asList(content.split(" ")).iterator());Once the list is prepared, we need to process it to create a pair of words and a number of occurrences.

JavaPairRDD wordCount = wordsList.mapToPair(t -> new Tuple2(t, 1)).reduceByKey((x, y) -> x + y);

Here you must notice, that we have transformed RDD twice, first by breaking the RDD into a list of words and second, by creating PairRDD for words and their number of occurrences. Also notice that these transformations won’t do anything by themselves till we take some action on the RDD.

Once the pairs of words and their occurrences are ready, we write it back to disk to persist it.

wordCount.saveAsTextFile("Word Count");Finally, all this code is encompassed in the main method for execution. Here we are getting file name from arguments are passing it on for processing.

public static void main(String[] args) {

if (args.length == 0){

System.out.println("No file provided");

System.exit(0);

}

String filename = args[0];

....

}5.3 Run the Code

If you are using an IDE like Eclipse or IntelliJ then the code can be executed directly from IDE.

Here is a command to run it from the command line.

mvn exec:java -Dexec.mainClass=com.javacodegeek.examples.SparkExampleRDD -Dexec.args="input.txt"

5.4 Output

Once executed, a folder Word Count will be created in the current directory and you can look for the part-00000 file in the folder for the output

(queries,2) (stream,1) (increases,1) (Spark,3) (model,1) (it,1) (is,3) (The,1) (processing.,1) (computation,1) (built,2) (with,1) (MapReduce,1)

6. Download the Source Code

That was an Apache Spark Tutorial for beginners.

You can download the full source code of this example here: Apache Spark Tutorial for Beginners