Apache Kafka Tutorial for Beginners

This is a tutorial about Apache Kafka for beginners.

1. Introduction

Apache Kafka is a stream-processing software platform written in Scala and Java and maintained by the Apache Software Foundation. It was originally developed at LinkedIn. Here are common terms used in Kafka:

- Kafka cluster – a group of one or more Kafka brokers. ZooKeeper manages it via server discovery.

- Kafka broker – it is a Kafka server.

- Record – it is an immutable message record, which has an optional key, value, and timestamp.

- Producer – it produces streams of records.

- Consumer – it consumes streams of records.

- Topic – it groups a stream of records under the same name. The topic log is the topic’s disk storage and is broken into partitions. Each message in a partition is assigned a sequential id called an offset.
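To make the partition and offset terms more concrete, here is a minimal in-memory sketch. The PartitionLog class and its methods are hypothetical names used only for illustration and are not part of the Kafka API; the sketch only shows the idea that records are appended and each one receives the next sequential offset.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory model of a single topic partition (not a Kafka class).
class PartitionLog {
    // Records are only appended; existing entries are never changed.
    private final List<String> records = new ArrayList<>();

    // Appends a value and returns the offset assigned to it.
    long append(String value) {
        records.add(value);
        return records.size() - 1L;
    }

    // Returns the record stored at the given offset.
    String read(long offset) {
        return records.get((int) offset);
    }

    // Returns the offset that the next appended record will receive.
    long endOffset() {
        return records.size();
    }

    public static void main(String[] args) {
        PartitionLog partition = new PartitionLog();
        long first = partition.append("first message");
        long second = partition.append("second message");
        System.out.println("offsets: " + first + ", " + second);
        System.out.println("end offset: " + partition.endOffset());
    }
}
```

A real topic has one such log per partition, stored on disk by the broker.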


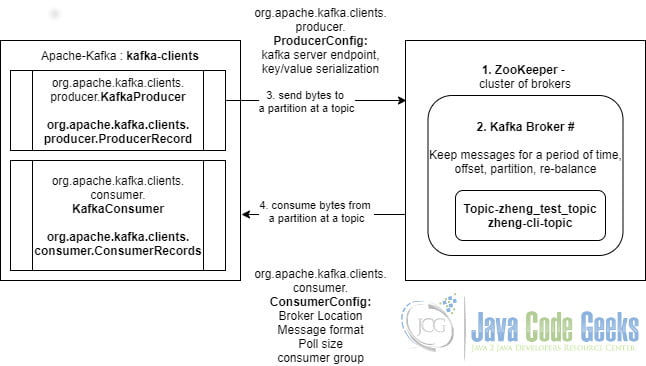

Figure 1 shows a Kafka client-server diagram which we will demonstrate in this tutorial.

In this tutorial, I will demonstrate the following items:

- How to install a Kafka server on Windows. This covers components 1 and 2 in the diagram.

- How to use the Apache kafka-clients producer and consumer APIs within a Spring Boot application. This covers steps 3 and 4 in the diagram.

- How to use common Kafka CLI commands to view topics, messages, and consumer group information.

2. Technologies Used

The example code in this article was built and run using:

- Java 8

- Maven 3.3.9

- Eclipse Oxygen

- JUnit 4.12

- Apache Kafka 2.6

3. Kafka Server

In this step, I will install Kafka 2.6, the latest version at the time of writing, on a Windows 10 computer. Please reference this link for details.

- Download from https://kafka.apache.org/downloads.

- Extract to the desired location. Mine is at C:\MaryZheng\kafka_2.12-2.6.0.

3.1 Configuration

In this step, I will explain two configuration files: zookeeper.properties and server.properties.

zookeeper.properties

# the directory where the snapshot is stored.
dataDir=/tmp/zookeeper
# the port at which the clients will connect
clientPort=2181
# disable the per-ip limit on the number of connections since this is a non-production config
maxClientCnxns=0
# Disable the adminserver by default to avoid port conflicts.
# Set the port to something non-conflicting if choosing to enable this
admin.enableServer=false

- Line 2 (dataDir): sets the data directory to /tmp/zookeeper.

- Line 4 (clientPort): sets the port that ZooKeeper clients connect to, 2181.

server.properties

############################# Server Basics #############################
# The id of the broker. This must be set to a unique integer for each broker.
broker.id=0
# The number of threads that the server uses for receiving requests from the network and sending responses to the network
num.network.threads=3
# The number of threads that the server uses for processing requests, which may include disk I/O
num.io.threads=8
# The send buffer (SO_SNDBUF) used by the socket server
socket.send.buffer.bytes=102400
# The receive buffer (SO_RCVBUF) used by the socket server
socket.receive.buffer.bytes=102400
# The maximum size of a request that the socket server will accept (protection against OOM)
socket.request.max.bytes=104857600

############################# Log Basics #############################
# A comma separated list of directories under which to store log files
log.dirs=/tmp/kafka-logs
# The default number of log partitions per topic. More partitions allow greater
# parallelism for consumption, but this will also result in more files across
# the brokers.
num.partitions=1
# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.
# This value is recommended to be increased for installations with data dirs located in RAID array.
num.recovery.threads.per.data.dir=1

############################# Internal Topic Settings #############################
# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"
# For anything other than development testing, a value greater than 1 is recommended to ensure availability such as 3.
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1

############################# Log Retention Policy #############################
# The minimum age of a log file to be eligible for deletion due to age
log.retention.hours=168
# The maximum size of a log segment file. When this size is reached a new log segment will be created.
log.segment.bytes=1073741824
# The interval at which log segments are checked to see if they can be deleted according
# to the retention policies
log.retention.check.interval.ms=300000

############################# Zookeeper #############################
zookeeper.connect=localhost:2181
# Timeout in ms for connecting to zookeeper
zookeeper.connection.timeout.ms=18000

############################# Group Coordinator Settings #############################
group.initial.rebalance.delay.ms=0

- Line 4: set the Kafka broker Id to 0.

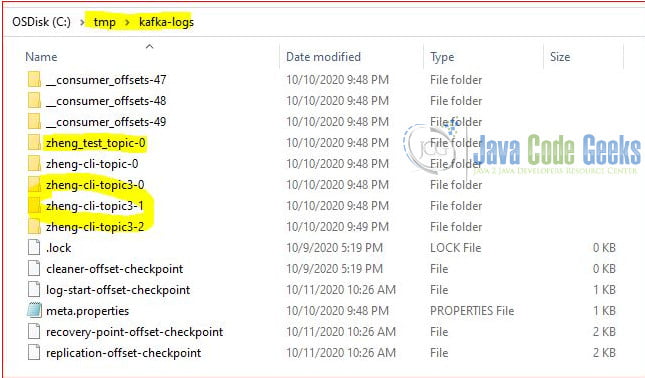

- Line 25: set the Kafka log files location. You can find the partitions for each topic here. See Figure 2 for an example.

- Line 30: set number of partition per topic. Can be overwritten via command line when creating a topic.

- Line 59: set the zookeeper connect endpoint.
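Both configuration files use the standard Java properties format, so they can be inspected with java.util.Properties. The following is a small sketch under that assumption; the BrokerConfigReader class is a hypothetical helper, and the inline text repeats only a few of the settings shown above instead of reading the real file.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper that parses properties-file text such as server.properties.
class BrokerConfigReader {
    // Parses the text; lines starting with '#' are treated as comments.
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        String text = String.join("\n",
                "# The id of the broker.",
                "broker.id=0",
                "log.dirs=/tmp/kafka-logs",
                "num.partitions=1",
                "zookeeper.connect=localhost:2181");
        Properties props = parse(text);
        // Numeric settings are stored as strings and must be converted explicitly.
        int brokerId = Integer.parseInt(props.getProperty("broker.id"));
        System.out.println("broker.id=" + brokerId);
        System.out.println("log.dirs=" + props.getProperty("log.dirs"));
        System.out.println("zookeeper.connect=" + props.getProperty("zookeeper.connect"));
    }
}
```

To read the actual file, the StringReader could be replaced with a FileReader pointing at config\server.properties.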

3.2 Start Servers

In this step, I will start a Kafka broker. First, I will start zookeeper with the following command:

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>zookeeper-server-start.bat ..\..\config\zookeeper.properties

Then start a kafka server with the following command:

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-server-start.bat ..\..\config\server.properties

Monitor the server log to ensure that the server is started.
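Besides reading the server log, a quick check is to see whether the ports are accepting connections. The sketch below assumes ZooKeeper listens on 2181 (as configured above) and the broker on 9092 (the address used by the CLI commands in this tutorial). PortCheck is a hypothetical helper, and an open port only suggests that the process is listening, not that startup has fully completed.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper that tests whether a TCP port accepts connections.
class PortCheck {
    // Returns true if a connection to host:port succeeds within timeoutMs.
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused or timed out: nothing is listening yet.
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("ZooKeeper (2181) listening: " + isListening("localhost", 2181, 1000));
        System.out.println("Kafka (9092) listening: " + isListening("localhost", 9092, 1000));
    }
}
```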

4. CLI Commands

Apache Kafka provides several utility commands to manage the topics, consumers, etc. In this step, I will demonstrate several CLI commands:

- How to check the Kafka version?

- How to list the topics?

- How to list the consumer groups?

- How to receive messages from a topic?

4.1 Check Version

You can check the Kafka version from the name of the installed folder: kafka_2.12-2.6.0 means Kafka 2.6.0 built for Scala 2.12. In this step, I will check both Kafka server and API versions with the following commands:

kafka-broker-api-versions.bat

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-broker-api-versions.bat --version
2.6.0 (Commit:62abe01bee039651)

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-broker-api-versions.bat --bootstrap-server localhost:9092 --version
2.6.0 (Commit:62abe01bee039651)

4.2 List Topics

In this step, I will use the kafka-topics command to list all the topics on the given Kafka broker and to create two topics.

kafka-topics.bat

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-topics.bat --list --zookeeper localhost:2181
__consumer_offsets
zheng_test_topic

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-topics.bat --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic zheng-cli-topic
Created topic zheng-cli-topic.

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-topics.bat --create --zookeeper localhost:2181 --replication-factor 1 --partitions 3 --topic zheng-cli-topic3
Created topic zheng-cli-topic3.

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-topics.bat --list --zookeeper localhost:2181
__consumer_offsets
zheng-cli-topic
zheng-cli-topic3
zheng_test_topic

- The __consumer_offsets topic, which appears in both --list outputs, is an internal topic.

4.3 List Consumer Groups

In this step, I will use the kafka-consumer-groups command to describe all consumer groups or one specific group.

kafka-consumer-groups.bat

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-consumer-groups.bat --bootstrap-server localhost:9092 --all-groups --describe

Consumer group 'zheng-test-congrp1' has no active members.

GROUP              TOPIC            PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-ID
zheng-test-congrp1 zheng_test_topic 0         1              1              0   -           -    -

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-consumer-groups.bat --bootstrap-server localhost:9092 --group zheng-test-congrp1 --describe

Consumer group 'zheng-test-congrp1' has no active members.

GROUP              TOPIC            PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-ID
zheng-test-congrp1 zheng_test_topic 0         1              1              0   -           -    -

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-consumer-groups.bat --bootstrap-server localhost:9092 --all-groups --describe

GROUP              TOPIC            PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID                                                        HOST            CLIENT-ID
zheng-test-congrp1 zheng-cli-topic  0         1              1              0   consumer-zheng-test-congrp1-1-09fe5f57-bd1a-4f5c-9609-7c1ec998a610 /192.168.29.225 consumer-zheng-test-congrp1-1
zheng-test-congrp1 zheng_test_topic 0         1              1              0   -                                                                  -               -

- First command (--all-groups --describe): lists the details of all consumer groups.

- "has no active members": no consumer in the group was running when the command was executed.

- Second command (--group zheng-test-congrp1 --describe): lists the details of one group.

- Third command: the zheng-cli-topic row has values in the CONSUMER-ID, HOST, and CLIENT-ID columns, which means the group has one active consumer for that topic.
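The LAG column in this output is the difference between LOG-END-OFFSET and CURRENT-OFFSET for a partition, that is, how many records the group has not yet consumed. As a small sketch of that arithmetic (ConsumerLag is a hypothetical helper, not a Kafka class):

```java
// Hypothetical helper that reproduces the LAG column arithmetic.
class ConsumerLag {
    // Lag is the log end offset minus the group's committed (current) offset.
    static long lag(long currentOffset, long logEndOffset) {
        return logEndOffset - currentOffset;
    }

    public static void main(String[] args) {
        // Values taken from the zheng_test_topic row above: both offsets are 1.
        System.out.println("lag=" + lag(1, 1));
    }
}
```

A lag of 0, as in the output above, means the group has consumed every record currently in the partition.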

4.4 Receive Message from a Topic

In this step, I will use the kafka-console-consumer command to receive messages from a given topic on a given broker.

kafka-console-consumer.bat

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic zheng-cli-topic3 --from-beginning

{"data", "some_value"}

C:\MaryZheng\kafka_2.12-2.6.0\bin\windows>kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic zheng-cli-topic3 --from-beginning --property print.key=true

Key3 {"data", "some_value"}

Key2 {"data", "some_value"}

- The second command adds --property print.key=true, which prints each Kafka record’s key before its value.

5. Spring Boot Application

In this step, I will create a Spring Boot application which uses the Apache kafka-clients library to publish messages to a topic and consume messages from a topic.

5.1 Dependencies

I will include kafka-clients in the pom.xml.

pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>jcg.zheng.demo</groupId>
    <artifactId>kafka-demo</artifactId>
    <version>1.0.0-SNAPSHOT</version>
    <packaging>jar</packaging>

    <name>kafka-demo</name>
    <description>kafka-demo Project</description>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>1.5.2.RELEASE</version>
        <relativePath />
    </parent>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.encoding>UTF-8</project.encoding>
        <java-version>1.8</java-version>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
        <kafka-clients.version>2.6.0</kafka-clients.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.kafka</groupId>
            <artifactId>kafka-clients</artifactId>
            <version>${kafka-clients.version}</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>

5.2 Spring Boot Application

In this step, I will create an Application class which is annotated with @SpringBootApplication.

Application.java

package jcg.zheng.demo;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication

public class Application {

public static void main(String[] args) {

SpringApplication.run(Application.class, args);

}

}

5.3 Spring Configuration

In this step, I will create an application.properties which holds the Kafka server address and the consumer group id.

application.properties

#=========================================================
#==== KAFKA Configuration ====
#=========================================================
jcg.zheng.bootStrapServers=localhost:9092
jcg.zheng.consumer.group=zheng-test-congrp1

5.4 Kafka Configuration

In this step, I will create a KafkaConfig.java class which is annotated with @Configuration and creates two Spring beans.

KafkaConfig.java

package jcg.zheng.demo.kafka;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

@Configuration

public class KafkaConfig {

@Value("${jcg.zheng.bootStrapServers}")

private String bootStrapServers;

@Value("${jcg.zheng.consumer.group}")

private String consumerGroup;

@Bean

public KafkaProducerFactory kafkaProducerFactory() {

return new KafkaProducerFactory(bootStrapServers);

}

@Bean

public KafkaConsumerFactory kafkaConsumerFactory() {

return new KafkaConsumerFactory(bootStrapServers, consumerGroup);

}

}

5.5 Kafka Consumer Factory

In this step, I will create a KafkaConsumerFactory.java which constructs an org.apache.kafka.clients.consumer.Consumer instance with the desired consumer configuration. It has three methods:

- KafkaConsumerFactory(String bootStrapServers, String consumerGroupId) – the constructor, which creates an object with the given Kafka broker and consumer group id.

- subscribe(String topicName) – subscribes to the given topic and returns a Consumer object.

- destroy() – closes the consumer.

KafkaConsumerFactory.java

package jcg.zheng.demo.kafka;

import java.util.Collections;

import java.util.Properties;

import org.apache.kafka.clients.consumer.Consumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.springframework.beans.factory.DisposableBean;

public class KafkaConsumerFactory implements DisposableBean {

private Consumer<String, String> consumer;

public KafkaConsumerFactory(String bootStrapServers, String consumerGroupId) {

Properties props = new Properties();

props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, bootStrapServers);

props.put(ConsumerConfig.GROUP_ID_CONFIG, consumerGroupId);

props.put(ConsumerConfig.MAX_POLL_RECORDS_CONFIG, 1);

props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");

props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, false);

props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,

"org.apache.kafka.common.serialization.StringDeserializer");

props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,

"org.apache.kafka.common.serialization.StringDeserializer");

consumer = new KafkaConsumer<>(props);

}

public Consumer<String, String> subscribe(String topicName) {

consumer.subscribe(Collections.singletonList(topicName));

return consumer;

}

@Override

public void destroy() throws Exception {

consumer.close();

}

}
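The ConsumerConfig constants used in the constructor resolve to plain string keys. The sketch below builds the same settings with those literal keys and comments on what each one does; ConsumerProps is a hypothetical helper, and the blank check on the broker address is an addition for illustration that the original class does not have. It uses only the JDK, so it can be run without a broker.

```java
import java.util.Properties;

// Hypothetical helper that builds the consumer settings with literal keys.
class ConsumerProps {
    static Properties build(String bootstrapServers, String groupId) {
        if (bootstrapServers == null || bootstrapServers.trim().isEmpty()) {
            throw new IllegalArgumentException("bootstrapServers must not be blank");
        }
        Properties props = new Properties();
        // Broker address(es) used for the initial connection.
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Consumers sharing a group id split the topic's partitions between them.
        props.setProperty("group.id", groupId);
        // Each poll returns at most one record.
        props.setProperty("max.poll.records", "1");
        // With no committed offset, start from the earliest available record.
        props.setProperty("auto.offset.reset", "earliest");
        // Offsets are committed by the application, not automatically.
        props.setProperty("enable.auto.commit", "false");
        // Keys and values are read as strings.
        props.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        return props;
    }

    public static void main(String[] args) {
        Properties props = build("localhost:9092", "zheng-test-congrp1");
        props.stringPropertyNames().stream().sorted()
                .forEach(name -> System.out.println(name + "=" + props.getProperty(name)));
    }
}
```

Because auto commit is disabled, the consuming code has to commit offsets itself, which is what KafkaMsgConsumer does in step 5.7 with commitAsync and commitSync.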

5.6 Kafka Producer Factory

In this step, I will create a KafkaProducerFactory.java which creates an org.apache.kafka.clients.producer.KafkaProducer instance with the desired configuration. It has three methods:

- KafkaProducerFactory(String bootStrapServers) – the constructor, which creates a Producer instance for the given Kafka broker.

- send(ProducerRecord producerRecord) – sends a ProducerRecord.

- destroy() – closes the producer when the bean is destroyed.

KafkaProducerFactory.java

package jcg.zheng.demo.kafka;

import java.util.Properties;

import java.util.concurrent.Future;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.Producer;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.RecordMetadata;

import org.springframework.beans.factory.DisposableBean;

public class KafkaProducerFactory implements DisposableBean {

private Producer<String, String> producer;

public KafkaProducerFactory(String bootStrapServers) {

Properties props = new Properties();

props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, bootStrapServers);

props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,

"org.apache.kafka.common.serialization.StringSerializer");

props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,

"org.apache.kafka.common.serialization.StringSerializer");

producer = new KafkaProducer<>(props);

}

public Future<RecordMetadata> send(ProducerRecord<String, String> producerRecord) {

return producer.send(producerRecord);

}

@Override

public void destroy() throws Exception {

producer.close();

}

}

Note: after steps 5.1 – 5.6, the application is ready to publish and consume messages from a Kafka broker.

5.7 Kafka Consumer

In this step, I will create a KafkaMsgConsumer.java which will process the message based on the business requirements.

KafkaMsgConsumer.java

package jcg.zheng.demo.kafka.app;

import java.time.Duration;

import javax.annotation.Resource;

import org.apache.kafka.clients.consumer.Consumer;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.stereotype.Component;

import jcg.zheng.demo.kafka.KafkaConsumerFactory;

@Component

public class KafkaMsgConsumer {

private static final Logger LOGGER = LoggerFactory.getLogger(KafkaMsgConsumer.class);

@Resource

private KafkaConsumerFactory kafkaConsumerFactory;

public void onMessage(String topic) {

LOGGER.info("onMessage for topic=" + topic);

Consumer <String, String > msgConsumer = kafkaConsumerFactory.subscribe(topic);

try {

while (true) {

ConsumerRecords<String, String> consumerRecord = msgConsumer

.poll(Duration.ofMillis(1000));

LOGGER.info("consumerRecord.count=" + consumerRecord.count());

consumerRecord.forEach(record -> {

LOGGER.info("Message Key=" + record.key());

LOGGER.info("Message Value=" + record.value());

LOGGER.info("Message Partition=" + record.partition());

LOGGER.info("Message Offset=" + record.offset());

});

msgConsumer.commitAsync();

}

} finally {

msgConsumer.commitSync();

msgConsumer.close();

}

}

}

5.8 Kafka Producer

In this step, I will create a KafkaMsgProducer.java which publishes the message to Kafka based on business requirements.

KafkaMsgProducer.java

package jcg.zheng.demo.kafka.app;

import javax.annotation.Resource;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.springframework.stereotype.Component;

import jcg.zheng.demo.kafka.KafkaProducerFactory;

@Component

public class KafkaMsgProducer {

@Resource

private KafkaProducerFactory kafkaProducerFactory;

public void publishMessage(String topic, String message, String key) {

if ((topic == null) || (topic.isEmpty()) || (message == null) || (message.isEmpty())) {

return;

}

if (key == null) {

kafkaProducerFactory.send(new ProducerRecord<String, String>(topic, message));

} else {

kafkaProducerFactory.send(new ProducerRecord<String, String>(topic, key, message));

}

}

}

6. JUnit Tests

6.1 ApplicationTests

In this step, I will create an ApplicationTests.java which is annotated with @SpringBootApplication.

ApplicationTests.java

package jcg.zheng.demo;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication

public class ApplicationTests {

public static void main(String[] args) {

SpringApplication.run(ApplicationTests.class, args);

}

}

6.2 KafkaMsgProducerTest

In this step, I will create a KafkaMsgProducerTest.java which has two test methods to publish to two topics.

KafkaMsgProducerTest.java

package jcg.zheng.demo.kafka.app;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

import jcg.zheng.demo.ApplicationTests;

import jcg.zheng.demo.kafka.app.KafkaMsgProducer;

@RunWith(SpringRunner.class)

@SpringBootTest(classes = ApplicationTests.class)

public class KafkaMsgProducerTest {

@Autowired

private KafkaMsgProducer pub;

String testMsg = "{\"data\", \"dummy_value 1\"}";

@Test

public void publishMessage_zheng_test_topic() {

pub.publishMessage("zheng_test_topic", testMsg, "Key1");

}

@Test

public void publishMessage_zheng_cli_topic() {

pub.publishMessage("zheng-cli-topic3", testMsg, "Key5");

}

}

6.3 KafkaMsgConsumerTest

In this step, I will create a KafkaMsgConsumerTest.java which includes two tests to consume from two topics.

KafkaMsgConsumerTest.java

package jcg.zheng.demo.kafka.app;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

import jcg.zheng.demo.ApplicationTests;

import jcg.zheng.demo.kafka.app.KafkaMsgConsumer;

@RunWith(SpringRunner.class)

@SpringBootTest(classes = ApplicationTests.class)

public class KafkaMsgConsumerTest {

@Autowired

private KafkaMsgConsumer consumer;

@Test

public void consume_zheng_test_topic() {

consumer.onMessage("zheng_test_topic");

}

@Test

public void consume_cli_topic() {

consumer.onMessage("zheng-cli-topic3");

}

}
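Note that onMessage in KafkaMsgConsumer polls inside while (true), so a test that calls it does not return on its own and has to be stopped manually. One possible alternative for tests is to stop after a number of consecutive empty polls. The sketch below illustrates that idea with a BlockingQueue standing in for the topic; BoundedPollLoop and drain are hypothetical names, and the same counting logic would have to be adapted to the real Consumer.poll call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical poll loop that ends after several empty polls in a row.
class BoundedPollLoop {
    // Polls the source until maxEmptyPolls consecutive polls return nothing.
    static List<String> drain(BlockingQueue<String> source, int maxEmptyPolls, long pollMillis) {
        List<String> received = new ArrayList<>();
        int emptyPolls = 0;
        while (emptyPolls < maxEmptyPolls) {
            try {
                String message = source.poll(pollMillis, TimeUnit.MILLISECONDS);
                if (message == null) {
                    emptyPolls++;      // nothing arrived within the timeout
                } else {
                    emptyPolls = 0;    // reset the counter once data arrives
                    received.add(message);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
                break;
            }
        }
        return received;
    }

    public static void main(String[] args) {
        BlockingQueue<String> topic = new LinkedBlockingQueue<>();
        topic.add("first message");
        topic.add("second message");
        System.out.println(drain(topic, 2, 50));
    }
}
```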

7. Demo

In this step, I will publish and consume messages within a Spring Boot application. Please make sure that the Kafka server is up and running.

7.1 Publisher Test Demo

Start the publisher test and capture the log. Repeat for several messages.

KafkaMsgProducerTest log

2020-10-11 09:04:19.022 INFO 112492 --- [ main] j.z.demo.kafka.app.KafkaMsgProducerTest : Started KafkaMsgProducerTest in 11.147 seconds (JVM running for 16.995)

2020-10-11 09:04:19.361 INFO 112492 --- [ main] j.zheng.demo.kafka.app.KafkaMsgProducer : Sending message with key: Key5

7.2 Consumer Test Demo

Start the consumer test and capture the logs.

KafkaMsgConsumerTest log

2020-10-11 09:03:19.048 INFO 118404 --- [ main] j.z.demo.kafka.app.KafkaMsgConsumerTest : Started KafkaMsgConsumerTest in 10.723 seconds (JVM running for 14.695)

2020-10-11 09:03:19.540 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : onMessage for topic=zheng-cli-topic3

2020-10-11 09:03:19.550 INFO 118404 --- [ main] o.a.k.clients.consumer.KafkaConsumer : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Subscribed to topic(s): zheng-cli-topic3

2020-10-11 09:03:19.683 INFO 118404 --- [ main] org.apache.kafka.clients.Metadata : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Cluster ID: nclNd8qBRga9PUDe8Y_WqQ

2020-10-11 09:03:19.719 INFO 118404 --- [ main] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Discovered group coordinator host.docker.internal:9092 (id: 2147483647 rack: null)

2020-10-11 09:03:19.758 INFO 118404 --- [ main] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] (Re-)joining group

2020-10-11 09:03:19.878 INFO 118404 --- [ main] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Join group failed with org.apache.kafka.common.errors.MemberIdRequiredException: The group member needs to have a valid member id before actually entering a consumer group.

2020-10-11 09:03:19.879 INFO 118404 --- [ main] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] (Re-)joining group

2020-10-11 09:03:19.941 INFO 118404 --- [ main] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Finished assignment for group at generation 12: {consumer-zheng-test-congrp1-1-117dc6d2-db20-4611-85cc-98c0cc813246=Assignment(partitions=[zheng-cli-topic3-0, zheng-cli-topic3-1, zheng-cli-topic3-2])}

2020-10-11 09:03:19.974 INFO 118404 --- [ main] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Successfully joined group with generation 12

2020-10-11 09:03:19.981 INFO 118404 --- [ main] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Notifying assignor about the new Assignment(partitions=[zheng-cli-topic3-0, zheng-cli-topic3-1, zheng-cli-topic3-2])

2020-10-11 09:03:19.990 INFO 118404 --- [ main] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Adding newly assigned partitions: zheng-cli-topic3-2, zheng-cli-topic3-0, zheng-cli-topic3-1

2020-10-11 09:03:20.039 INFO 118404 --- [ main] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Found no committed offset for partition zheng-cli-topic3-2

2020-10-11 09:03:20.040 INFO 118404 --- [ main] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Found no committed offset for partition zheng-cli-topic3-0

2020-10-11 09:03:20.040 INFO 118404 --- [ main] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Found no committed offset for partition zheng-cli-topic3-1

2020-10-11 09:03:20.246 INFO 118404 --- [ main] o.a.k.c.c.internals.SubscriptionState : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Resetting offset for partition zheng-cli-topic3-2 to offset 0.

2020-10-11 09:03:20.248 INFO 118404 --- [ main] o.a.k.c.c.internals.SubscriptionState : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Resetting offset for partition zheng-cli-topic3-0 to offset 0.

2020-10-11 09:03:20.249 INFO 118404 --- [ main] o.a.k.c.c.internals.SubscriptionState : [Consumer clientId=consumer-zheng-test-congrp1-1, groupId=zheng-test-congrp1] Resetting offset for partition zheng-cli-topic3-1 to offset 0.

2020-10-11 09:03:20.336 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : consumerRecord.count=1

2020-10-11 09:03:20.340 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Key=Key3

2020-10-11 09:03:20.340 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Value={"data", "some_value"}

2020-10-11 09:03:20.340 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Partition=2

2020-10-11 09:03:20.340 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Offset=0

2020-10-11 09:03:20.387 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : consumerRecord.count=1

2020-10-11 09:03:20.388 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Key=Key2

2020-10-11 09:03:20.388 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Value={"data", "some_value"}

2020-10-11 09:03:20.389 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Partition=1

2020-10-11 09:03:20.391 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Offset=0

2020-10-11 09:03:20.401 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : consumerRecord.count=1

2020-10-11 09:03:20.402 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Key=Key4

2020-10-11 09:03:20.403 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Value={"data", "dummy_value 1"}

2020-10-11 09:03:20.404 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Partition=1

2020-10-11 09:03:20.405 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : Message Offset=1

2020-10-11 09:03:21.418 INFO 118404 --- [ main] j.zheng.demo.kafka.app.KafkaMsgConsumer : consumerRecord.count=0
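In the log above, the records with keys Key3, Key2, and Key4 land on partitions 2, 1, and 1 of the three-partition topic. For records with a key, Kafka's default partitioner hashes the serialized key and takes the result modulo the number of partitions, so records with the same key go to the same partition. The sketch below shows that idea only: it uses Arrays.hashCode as a stand-in for Kafka's murmur2 hash, so the partition numbers it prints will not necessarily match the ones in the log. KeyPartitionSketch is a hypothetical name.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical illustration of key-based partitioning (not Kafka's real hash).
class KeyPartitionSketch {
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        // Stand-in hash; Kafka's default partitioner uses murmur2 here.
        int hash = Arrays.hashCode(keyBytes);
        // Clear the sign bit so the result of the modulo is never negative.
        return (hash & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        for (String key : new String[] {"Key3", "Key2", "Key4"}) {
            System.out.println(key + " -> partition " + partitionFor(key, 3));
        }
    }
}
```

Since the mapping depends only on the key and the partition count, ordering is preserved per key as long as the number of partitions does not change.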

8. Summary

In this tutorial, I showed how to install and start a Kafka server, how to inspect topics, consumer groups, and messages from the command line, and how to build a Spring Boot application with the kafka-clients library to publish and consume messages.

9. Download the Source Code

That was an Apache Kafka tutorial for beginners.

You can download the full source code of this example here: Apache Kafka Tutorial for Beginners