Hadoop Kerberos Authentication Tutorial

In this tutorial, we will see how to secure a Hadoop cluster by implementing authentication with Kerberos, the standard authentication mechanism used to secure Hadoop clusters.

1. Introduction

Kerberos is the standard and most widely used way of implementing user authentication in Hadoop clusters. It is a network authentication protocol developed at MIT, designed to provide authentication for client-server applications using secret-key cryptography.

Kerberos is used in many commercial products. MIT also provides a free implementation, MIT Kerberos, under a permissive copyright license. Its source code is freely available, so anyone can review it and verify the security implementation.

By default, Hadoop uses "simple" authentication (hadoop.security.authentication is set to simple), which is not secure: it trusts whatever user identity the client supplies, leaving the cluster vulnerable. To close this gap, Kerberos was introduced into the Hadoop ecosystem. It provides a secure way to verify the identity of users.

2. Key Terms

There are a few terms common to most security implementations, and Kerberos identity verification uses them as well.

2.1 Principal

An identity that needs to be verified is referred to as a principal. Principals are not necessarily users; there can be multiple types of identities.

Principals are usually divided into two categories:

- User Principals

- Service Principals

User Principal Names (UPN)

User Principal Names refer to users, which are similar to users in an operating system.

Service Principal Names (SPN)

Service Principal Names refer to services accessed by a user, such as a database.
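Both kinds of principal share one name format, primary/instance@REALM, where the instance is optional. A user principal is often just a primary (alice@EXAMPLE.COM), while a service principal typically uses the host name as its instance (hdfs/node1.example.com@EXAMPLE.COM). The bash function below is a hypothetical illustration that splits a name into those three parts; the function name and the example principals are made up for this sketch.

```bash
#!/usr/bin/env bash
# Hypothetical helper: split a principal of the form primary[/instance]@REALM.
parse_principal() {
  local principal="$1" name realm="" primary instance=""
  name="${principal%@*}"            # drop "@REALM" (text after the last '@')
  if [[ "$principal" == *@* ]]; then
    realm="${principal##*@}"        # keep only the text after the last '@'
  fi
  primary="${name%%/*}"             # text before the first '/'
  if [[ "$name" == */* ]]; then
    instance="${name#*/}"           # text after the first '/'
  fi
  printf 'primary=%s instance=%s realm=%s\n' "$primary" "$instance" "$realm"
}

parse_principal "alice@EXAMPLE.COM"
# prints: primary=alice instance= realm=EXAMPLE.COM
parse_principal "hdfs/node1.example.com@EXAMPLE.COM"
# prints: primary=hdfs instance=node1.example.com realm=EXAMPLE.COM
```

User principals can carry an instance too: an administrator such as javacodegeeks/admin@EXAMPLE.COM has the instance admin.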

2.2 Realm

A realm in Kerberos refers to an authentication administrative domain. Principals are assigned to specific realms in order to demarcate boundaries and simplify administration.

2.3 Key Distribution Center (KDC)

The Key Distribution Center contains all the information about principals and realms. The security of the KDC itself is critical: if the KDC is compromised, the entire realm is compromised.

Key Distribution Center consists of three parts:

- The Kerberos Database: the repository of all principals and realms.

- Authentication Service (AS): the AS authenticates clients and grants them tickets (ticket-granting tickets) when they make a request to the AS.

- Ticket Granting Service (TGS): the TGS validates tickets and issues service tickets.

3. Kerberos in Hadoop

To implement Kerberos security and authentication in Hadoop, we need to configure Hadoop to work with Kerberos. The step-by-step guide below shows how to do that.

3.1 How to create a Key Distribution System

We will follow the steps mentioned below to create a KDS:

- To start with, we need to create a key distribution center (KDC) for the Hadoop cluster. It is advisable to use a separate KDC dedicated to Hadoop and not used by any other application.

- The second step is to create service principals. We will create separate service principals for each of the Hadoop services, that is, HDFS, YARN, and MapReduce.

- The third step is to create keytabs (files that store a principal's Kerberos keys) for each service principal.

- The fourth step is to distribute the keytabs for the service principals to each of the cluster nodes.

- The fifth and last step is to configure all services to rely on Kerberos authentication.
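Steps 2 to 4 can be scripted on the KDC with kadmin.local, using addprinc -randkey to create each service principal and ktadd -k to export its keys to a keytab. The sketch below is one possible approach under stated assumptions: the host names, keytab directories, and the principal primaries hdfs, yarn, and mapred are hypothetical example values. By default it only prints the commands (DRY_RUN=1) so you can review them before running it as root with DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch of steps 2-4: one service principal per Hadoop service and host,
# one keytab per principal, then copy each keytab to its host.
# REALM, HOSTS, KEYTAB_DIR and REMOTE_DIR are hypothetical example values.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 executes them.
set -euo pipefail

REALM="${REALM:-EXAMPLE.COM}"
KEYTAB_DIR="${KEYTAB_DIR:-/root/keytabs}"
REMOTE_DIR="${REMOTE_DIR:-/etc/security/keytabs}"
DRY_RUN="${DRY_RUN:-1}"
SERVICES=(hdfs yarn mapred)
HOSTS=(node1.example.com node2.example.com)

# Print the command in dry-run mode (quoting is not shown), otherwise run it.
run() {
  if [[ "$DRY_RUN" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

provision() {
  local host svc principal keytab
  run install -d -m 700 "$KEYTAB_DIR"   # root-only staging directory
  for host in "${HOSTS[@]}"; do
    for svc in "${SERVICES[@]}"; do
      principal="${svc}/${host}@${REALM}"
      keytab="${KEYTAB_DIR}/${svc}.${host}.keytab"
      # Step 2: create the service principal with a random key.
      run kadmin.local -q "addprinc -randkey ${principal}"
      # Step 3: export the principal's keys to a keytab file.
      run kadmin.local -q "ktadd -k ${keytab} ${principal}"
      # Step 4: copy the keytab to the node that runs the service.
      run scp "$keytab" "${host}:${REMOTE_DIR}/${svc}.service.keytab"
    done
  done
}

provision
```

Note that ktadd generates a new random key each time it runs, so re-running it invalidates keytabs already copied to the nodes. On each node, REMOTE_DIR must exist, and the copied keytabs should be readable only by the matching service user.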

3.2 Implementing Kerberos Key Distribution System

Before implementing Kerberos authentication, let us run a command on Hadoop. We will try the same command again after Kerberos is in place, at which point it will no longer be possible to run commands without authenticating.

```bash
hadoop fs -mkdir /usr/local/kerberos
```

The Kerberos server must be installed on a host with a fully qualified domain name (FQDN), because by convention the realm name is the domain name written in uppercase. In this tutorial, let's assume the KDC host is kerberos.example.com, so the realm is EXAMPLE.COM; substitute your own domain. Let's start by installing the client and the server. In a cluster, we designate one node to act as the KDC server, and the other nodes are clients from which we can request tickets.

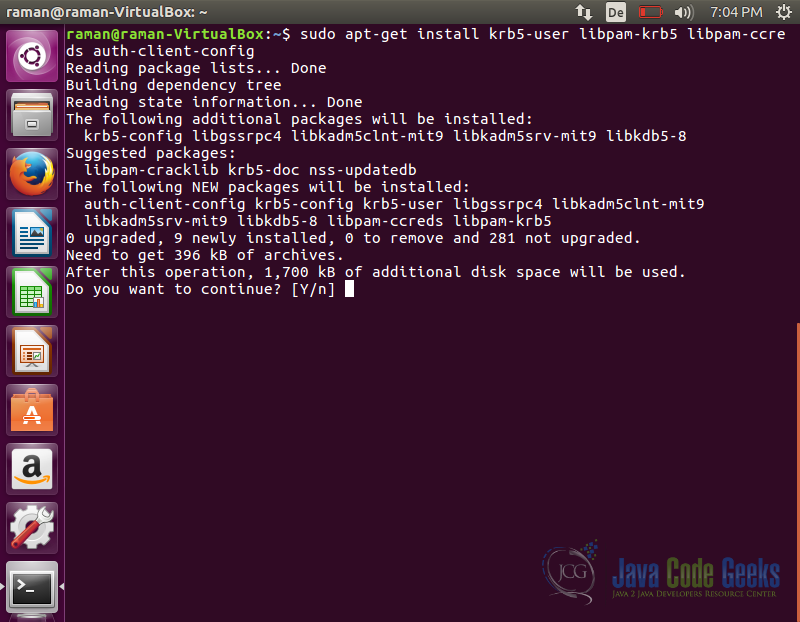
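To double-check the realm name for your own KDC host, the naming convention can be expressed as a small bash function. realm_from_fqdn is a hypothetical helper, and the rule it encodes (drop the host label, uppercase the rest) is only the usual convention, not something Kerberos enforces.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive the conventional realm name from a host's FQDN
# by dropping the first (host) label and upper-casing the remaining domain.
realm_from_fqdn() {
  local fqdn="$1"
  if [[ "$fqdn" != *.* ]]; then
    echo "error: '$fqdn' is not a fully qualified domain name" >&2
    return 1
  fi
  local domain="${fqdn#*.}"   # kerberos.example.com -> example.com
  printf '%s\n' "$domain" | tr '[:lower:]' '[:upper:]'
}

# On the KDC host itself you could pass "$(hostname -f)" instead.
realm_from_fqdn "kerberos.example.com"   # prints EXAMPLE.COM
```

If your hosts live in a subdomain (for example node1.hadoop.example.com), this rule yields HADOOP.EXAMPLE.COM. You can still choose the parent domain as the realm; what matters is that every configuration file uses the same name.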

Install Client

The command below installs the client, which is used to request tickets from the KDC:

```bash
sudo apt-get install krb5-user libpam-krb5 libpam-ccreds auth-client-config
```

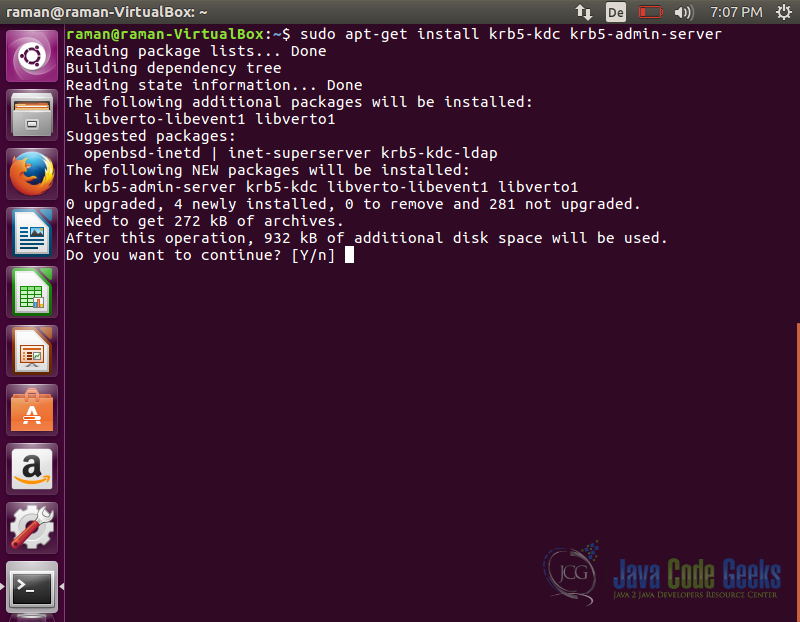

Install Kerberos Admin Server

To install the server and the KDC, use the following command:

```bash
sudo apt-get install krb5-kdc krb5-admin-server
```

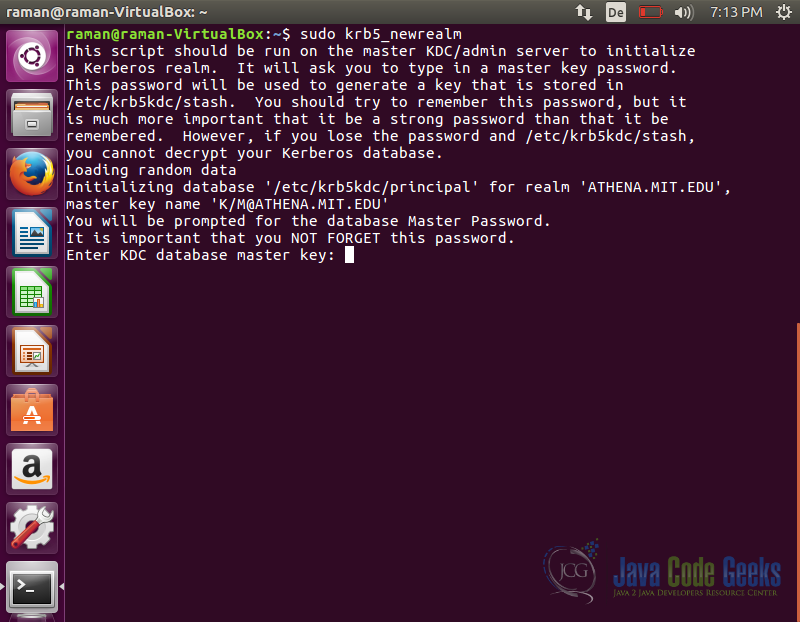

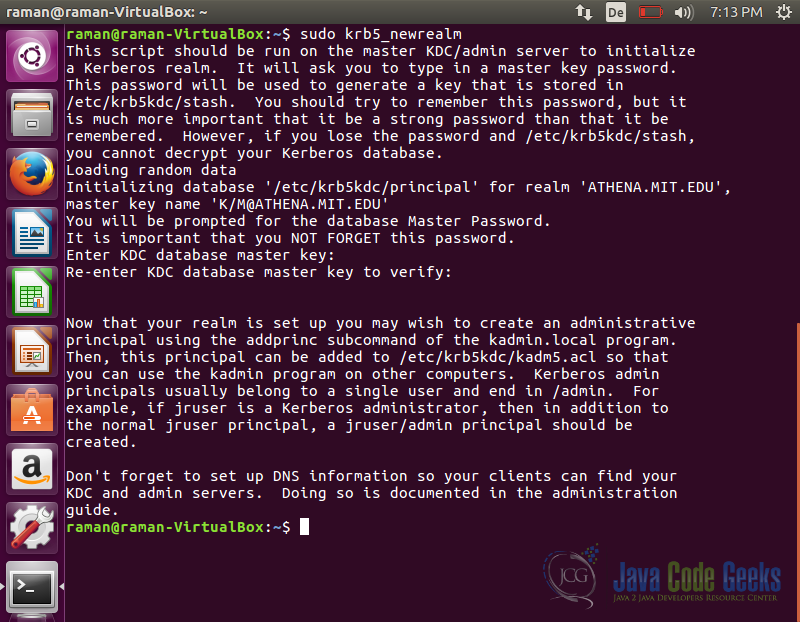

Create Realm

Run the following command on the machine that will act as the KDC server to initialize a new realm:

```bash
sudo krb5_newrealm
```

Enter a master key password when prompted, and the command will finish the setup.

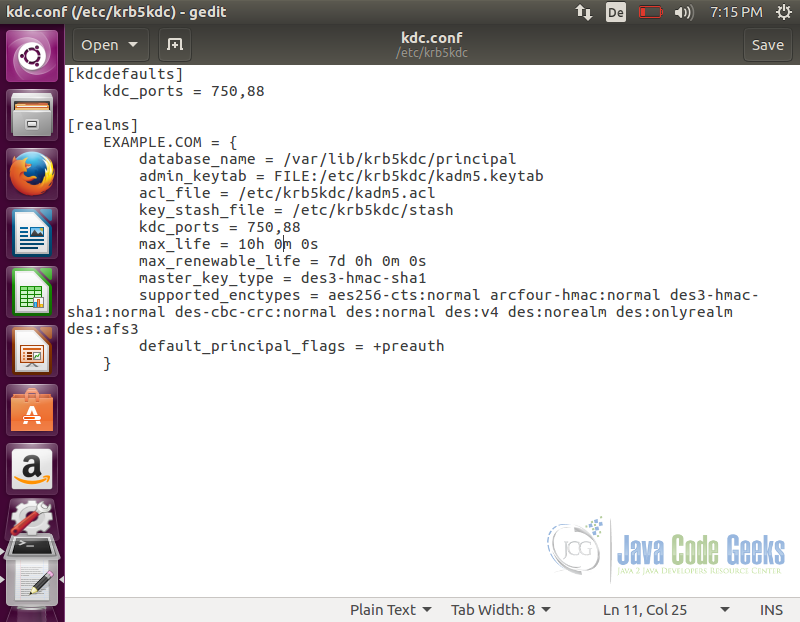

Once the installation is complete, edit the kdc.conf file, located at /etc/krb5kdc/kdc.conf, to set the proper configuration. Open the file using the command below:

```bash
sudo gedit /etc/krb5kdc/kdc.conf
```

The settings of interest are the location of the KDC data files, the period for which tickets remain valid, and the realm name (set it to your realm, EXAMPLE.COM in this tutorial). Avoid changing other settings unless you are an advanced user and are sure of the impact.

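The next step is to create a KDC database for our installation. Run the following command:

```bash
sudo kdb5_util create -r EXAMPLE.COM -s
```

This creates the database and a stash file that stores the database's master key. The master key is used to encrypt the database to improve security, and you can choose its password yourself. Note that on Debian and Ubuntu, krb5_newrealm already creates the database and stash file; if you ran it above, this command fails with an error like "file exists while creating database", and you can skip this step.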

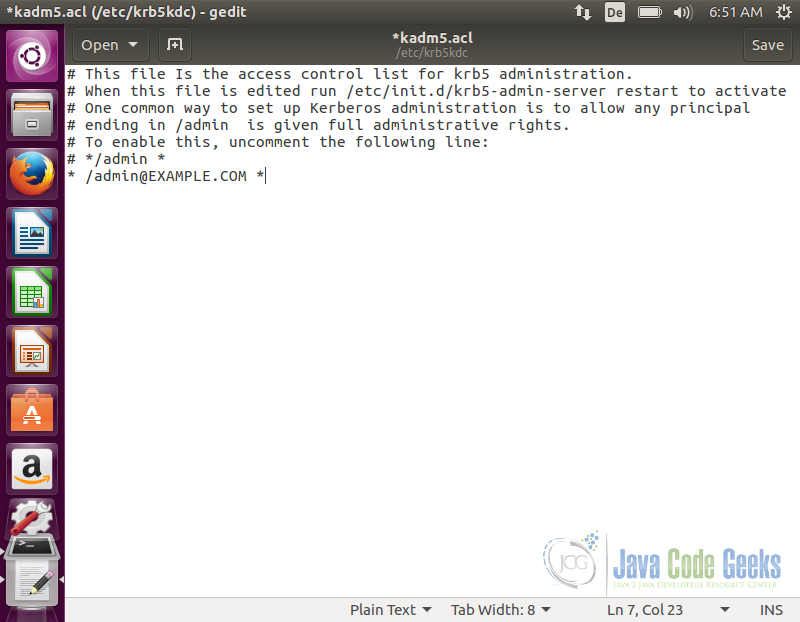
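Running kdb5_util create against a database that already exists fails with an error such as "file exists while creating database". If this step is part of a setup script that may be rerun, a small guard avoids that. The sketch below assumes the database file lives at /var/lib/krb5kdc/principal, a common Debian/Ubuntu default; check the database_name setting in your kdc.conf (Red Hat-based systems typically use /var/kerberos/krb5kdc/principal). create_kdc_db is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Create the KDC database only if it does not exist yet.
# DB_FILE is an assumption: check database_name in your kdc.conf.
# DRY_RUN=1 (the default) only prints the kdb5_util command.
set -euo pipefail

DB_FILE="${DB_FILE:-/var/lib/krb5kdc/principal}"
REALM="${REALM:-EXAMPLE.COM}"
DRY_RUN="${DRY_RUN:-1}"

create_kdc_db() {
  if [[ -e "$DB_FILE" ]]; then
    echo "KDC database already exists at $DB_FILE; skipping creation."
    return 0
  fi
  if [[ "$DRY_RUN" == "1" ]]; then
    echo "kdb5_util create -r $REALM -s"
  else
    kdb5_util create -r "$REALM" -s   # prompts for the master key password
  fi
}

create_kdc_db
```

To start over deliberately instead of skipping, the existing database has to be removed first (kdb5_util provides a destroy command for this), which deletes every principal in it.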

After the database has been created, edit the ACL file to include the Kerberos principals of administrators. This file identifies the principals with administrative rights on the Kerberos database, and its location is set by the acl_file setting in kdc.conf. Open the ACL file by running the following command:

```bash
sudo gedit /etc/krb5kdc/kadm5.acl
```

In this file, add the following line, which grants all privileges to any principal with the admin instance in the EXAMPLE.COM realm:

```
*/admin@EXAMPLE.COM *
```

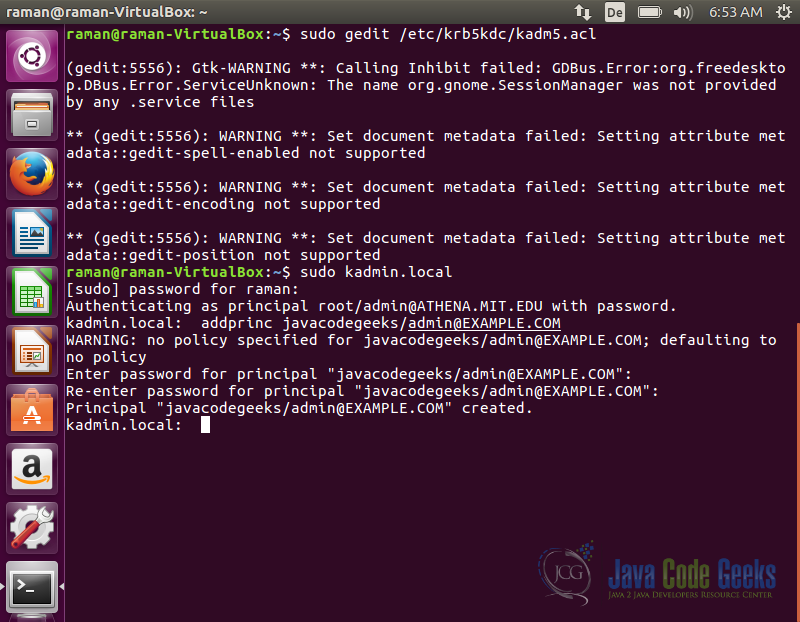
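If you add the ACL entry from a script instead of an editor, make sure that rerunning the script does not append the same entry twice. A minimal sketch; ensure_line is a hypothetical helper name, and writing the real /etc/krb5kdc/kadm5.acl requires root.

```bash
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already there, so
# re-running a setup script does not duplicate kadm5.acl entries.
set -euo pipefail

ensure_line() {
  local file="$1" line="$2"
  if [[ -f "$file" ]] && grep -qxF -- "$line" "$file"; then
    return 0   # the exact line is already present
  fi
  # Start on a fresh line if the file does not end with a newline.
  if [[ -s "$file" && -n "$(tail -c 1 "$file")" ]]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}

# Usage on the KDC, from a script running as root:
#   ensure_line /etc/krb5kdc/kadm5.acl '*/admin@EXAMPLE.COM *'
```

grep -F treats the asterisks in the ACL entry as literal characters, so the check compares the whole line exactly.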

We can use the kadmin.local utility to add administrator users to the Kerberos database. To add a new administrator, run the following command:

```bash
sudo kadmin.local
```

At the kadmin.local: prompt, enter the following command:

```
addprinc javacodegeeks/admin@EXAMPLE.COM
```

You will be asked to set a password for the new principal. This adds javacodegeeks/admin as an administrator of the Kerberos database, because it matches the */admin@EXAMPLE.COM ACL entry.

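Now we are ready to start the Kerberos services. Start them by running the commands below:

```bash
sudo service krb5-kdc start
sudo service krb5-admin-server start
```

These commands start the Kerberos Key Distribution Center and the Kerberos admin service. On Ubuntu and Debian the services are named krb5-kdc and krb5-admin-server; on Red Hat-based systems they are named krb5kdc and kadmin.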

If you want to reconfigure Kerberos from scratch in the future, for example to change the realm name or other settings, use this command:

```bash
sudo dpkg-reconfigure krb5-kdc
```

The Kerberos client configuration is stored in the file /etc/krb5.conf. Edit this file to point the client to the correct KDC: set default_realm = EXAMPLE.COM in the [libdefaults] section, and add the realm to the [realms] section. Open the file with:

```bash
sudo gedit /etc/krb5.conf
```

Once the krb5.conf file is open, add the following lines:

```
EXAMPLE.COM = {
    kdc = kerberos.example.com
    admin_server = kerberos.example.com
}
```

Add the lines below under [domain_realm]:

```
.example.com = EXAMPLE.COM
example.com = EXAMPLE.COM
```
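Instead of editing krb5.conf by hand on every node, you could generate the sections discussed here (default realm, realm entry, and domain mapping) into a file and review it before copying it to /etc/krb5.conf. A sketch under assumptions: write_krb5_conf is a hypothetical helper, the values are this tutorial's examples, and the output replaces rather than merges with any existing configuration.

```bash
#!/usr/bin/env bash
# Write a minimal client krb5.conf for a single realm.
# Usage: write_krb5_conf OUTFILE REALM DOMAIN KDC_HOST
set -euo pipefail

write_krb5_conf() {
  local out="$1" realm="$2" domain="$3" kdc_host="$4"
  cat > "$out" <<EOF
[libdefaults]
    default_realm = ${realm}

[realms]
    ${realm} = {
        kdc = ${kdc_host}
        admin_server = ${kdc_host}
    }

[domain_realm]
    .${domain} = ${realm}
    ${domain} = ${realm}
EOF
}

# Write to a temporary file and show the result for review.
conf="$(mktemp)"
write_krb5_conf "$conf" EXAMPLE.COM example.com kerberos.example.com
cat "$conf"
```

The .example.com entry maps every host in the domain to the realm, while the bare example.com entry covers the domain name itself.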

With Kerberos set up, we also need to edit the SSH configuration to allow Kerberos authentication to be used across the cluster:

```bash
sudo gedit /etc/ssh/sshd_config
```

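Once the SSH configuration file is open, add the following lines:

```
# Kerberos options
KerberosAuthentication yes
KerberosGetAFSToken no
KerberosOrLocalPasswd yes
KerberosTicketCleanup yes
```

Restart the SSH service afterwards (for example, sudo service ssh restart) so that the changes take effect.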
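To confirm that the Kerberos options made it into sshd_config on every node, a small check can help. The sketch below is a hypothetical helper, check_sshd_kerberos, that reports each of the four options that is missing or set to a different value and returns non-zero in that case.

```bash
#!/usr/bin/env bash
# Report which of the tutorial's Kerberos options are missing from an
# sshd_config file (or set to a different value). Returns 1 if any are.
set -euo pipefail

check_sshd_kerberos() {
  local file="$1" missing=0 opt want
  while read -r opt want; do
    # sshd keywords are case-insensitive, hence grep -i.
    if ! grep -Eiq "^[[:space:]]*${opt}[[:space:]]+${want}[[:space:]]*$" "$file"; then
      echo "missing: ${opt} ${want}"
      missing=1
    fi
  done <<'EOF'
KerberosAuthentication yes
KerberosGetAFSToken no
KerberosOrLocalPasswd yes
KerberosTicketCleanup yes
EOF
  return "$missing"
}

# Usage: check the file, then let sshd validate its whole configuration.
#   check_sshd_kerberos /etc/ssh/sshd_config && sudo sshd -t
```

Commented-out lines such as #KerberosAuthentication yes do not count, because the pattern requires the keyword at the start of the line. sshd uses the first value it finds for each keyword, so also make sure no earlier line sets a conflicting value.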

We are nearing the end of the Kerberos setup. The last step is to edit Hadoop's core-site.xml file to enable Kerberos authentication. This has to be done on all nodes in the cluster.

```bash
sudo gedit /usr/local/hadoop/hadoop-2.7.1/etc/hadoop/core-site.xml
```

Once the file is open, add the following properties inside the `<configuration>` element:

```xml
<property>
  <name>hadoop.security.authentication</name>
  <value>kerberos</value>
</property>
<property>
  <name>hadoop.security.authorization</name>
  <value>true</value>
</property>
```
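Because the core-site.xml change must be made on every node, one option is to edit the file once and copy it to the rest of the cluster. The sketch below assumes passwordless SSH to each node, write access to the Hadoop configuration directory there, and the hypothetical host names shown; like the other sketches, it only prints the commands unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Copy this node's edited core-site.xml to the other nodes of the cluster.
# HOSTS are hypothetical; CONF is the Hadoop 2.7.1 path used in this tutorial.
# DRY_RUN=1 (the default) only prints the scp commands.
set -euo pipefail

CONF="/usr/local/hadoop/hadoop-2.7.1/etc/hadoop/core-site.xml"
HOSTS=(node1.example.com node2.example.com node3.example.com)
DRY_RUN="${DRY_RUN:-1}"

push_core_site() {
  local host
  for host in "${HOSTS[@]}"; do
    if [[ "$DRY_RUN" == "1" ]]; then
      echo "scp ${CONF} ${host}:${CONF}"
    else
      scp "$CONF" "${host}:${CONF}"
    fi
  done
}

push_core_site
```

Hadoop daemons read core-site.xml when they start, so restart them on each node once the new file is in place.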

Final Test

Before implementing Kerberos, we created a new directory without any authentication. Now that Kerberos authentication is in place, let's try to create another directory:

```bash
hadoop fs -mkdir /usr/local/kerberos2
```

Without a valid Kerberos ticket, the command now fails to create the directory. A user must first obtain a ticket with kinit before running Hadoop commands.

4. Conclusion

In this tutorial, we started with an introduction to Kerberos and how it is used to add security to Hadoop clusters. We discussed the basic theoretical concepts of Kerberos, followed by the installation and configuration of the client and server components. Finally, we configured Hadoop to use Kerberos authentication and set up SSH to accept Kerberos authentication.

I hope this serves as a clear introduction to Kerberos authentication and its use in Apache Hadoop.
