Lucene Analyzer Example

In this example, we will learn about Lucene's Analyzer class. We have already used one of its implementations, StandardAnalyzer, in our indexing example. Here we go through the basic concepts behind the Analyzer class itself, how it is used, and the main analyzers that Lucene provides.

This post demonstrates the different analysis options and features that Lucene offers through the Analyzer class.

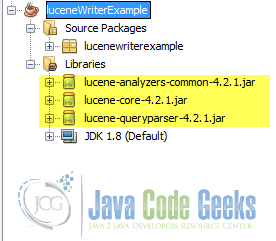

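The code in this example was developed in NetBeans IDE 8.0.2 using Lucene 4.2.1. Newer Lucene releases change several of the APIs used here (for example, analyzer constructors no longer take a Version argument and the createComponents signature is different), so expect to adapt the code if you try it with the latest version.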

1. Analyzer Class

The Analyzer class, defined in Lucene Core, is responsible for turning text into the terms that Lucene indexes and searches. It is used both when documents are indexed and when query strings are parsed, and Lucene ships with a wide range of Analyzer implementations for different analysis needs.

An Analyzer represents a policy for extracting index terms from text. It builds a TokenStream from the text, so both the data being indexed and a query string entered by a user are broken into terms according to the same policy. This makes analysis a prerequisite for both indexing and searching in Lucene. Analyzer is declared in the org.apache.lucene.analysis package as an abstract class:

public abstract class Analyzer extends Object implements Closeable
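To make the "same policy for indexing and searching" point concrete, here is a minimal sketch assuming Lucene 4.2.1 with lucene-core, lucene-analyzers-common and lucene-queryparser on the classpath. The class name SameAnalyzerDemo, the field name content and the in-memory RAMDirectory are illustrative choices, not part of the original example. One StandardAnalyzer instance is passed both to IndexWriterConfig and to QueryParser, so an upper-case query term still matches the lowercased indexed term.

```java
import java.io.IOException;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.queryparser.classic.ParseException;
import org.apache.lucene.queryparser.classic.QueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.RAMDirectory;
import org.apache.lucene.util.Version;

// Hypothetical demo class: one analyzer shared by indexing and query parsing (Lucene 4.2.1 API)
public class SameAnalyzerDemo {

    /** Indexes a single document, then returns how many documents match queryString. */
    public static int countHits(String queryString) throws IOException, ParseException {
        Analyzer analyzer = new StandardAnalyzer(Version.LUCENE_42);
        Directory dir = new RAMDirectory(); // in-memory index, for illustration only

        // Index time: the analyzer turns the field text into terms
        IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig(Version.LUCENE_42, analyzer));
        Document doc = new Document();
        doc.add(new TextField("content",
                "Lucene is a simple yet powerful java based search library.", Field.Store.YES));
        writer.addDocument(doc);
        writer.close();

        // Query time: the same analyzer turns the query string into terms
        QueryParser parser = new QueryParser(Version.LUCENE_42, "content", analyzer);
        Query query = parser.parse(queryString);

        DirectoryReader reader = DirectoryReader.open(dir);
        try {
            return new IndexSearcher(reader).search(query, 10).totalHits;
        } finally {
            reader.close();
            dir.close();
            analyzer.close();
        }
    }

    public static void main(String[] args) throws Exception {
        // "LIBRARY" is lowercased by StandardAnalyzer, so it matches the indexed term "library"
        System.out.println(countHits("LIBRARY"));
    }
}
```

If the query were analyzed with a different policy (for example, one that does not lowercase), the same query would find nothing, which is why the analyzer is usually shared.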

2. Usages Of Analyzer Class

2.1 Defining your own Class

You can write your own helper class that tokenizes a string by calling the tokenStream method of an Analyzer and reading the terms from the resulting TokenStream.

TokenizewithAnalyzer.java

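import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;

/**
 * Utility that returns the list of terms an Analyzer produces for a string.
 *
 * @author Niraj
 */
public final class TokenizewithAnalyzer {

    private TokenizewithAnalyzer() {}

    public static List<String> tokenizeString(Analyzer analyzer, String str) {
        List<String> result = new ArrayList<>();
        // The field name is null: analyzers that do not vary per field ignore it.
        // try-with-resources closes the stream, which Lucene requires before it is reused.
        try (TokenStream stream = analyzer.tokenStream(null, new StringReader(str))) {
            // Register the term attribute once; incrementToken() updates it in place
            CharTermAttribute term = stream.addAttribute(CharTermAttribute.class);
            stream.reset();                 // mandatory before the first incrementToken()
            while (stream.incrementToken()) {
                result.add(term.toString());
            }
            stream.end();                   // finishes the stream after the last token
        } catch (IOException e) {
            // Not expected with a StringReader, but tokenStream() declares IOException
            throw new RuntimeException(e);
        }
        return result;
    }
}

Tester.java

import java.util.List;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.util.Version;

public class Tester {
    public static void main(String[] args) {
        String text = "Lucene is a simple yet powerful java based search library.";
        // StandardAnalyzer lowercases and drops English stop words such as "is" and "a"
        Analyzer analyzer = new StandardAnalyzer(Version.LUCENE_42);
        List<String> ss = TokenizewithAnalyzer.tokenizeString(analyzer, text);
        System.out.print("==>" + ss + " \n");
        analyzer.close();
    }
}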

Output

run:
==>[lucene, simple, yet, powerful, java, based, search, library]
BUILD SUCCESSFUL (total time: 1 second)

2.2 Common Analyzers

Lucene ships with several ready-made analyzers that can be used to analyze text. Some common ones are:

- WhitespaceAnalyzer: Splits text into tokens at whitespace; it does not lowercase.

- SimpleAnalyzer: Splits text into tokens at non-letters, and then lowercases them.

- StopAnalyzer: Works like SimpleAnalyzer and additionally removes common English stop words.

- StandardAnalyzer: The most sophisticated of the four: it splits text using Unicode word-boundary rules that recognize general token types, lowercases the tokens and removes stop words.

3. Analyzer examples

Suppose the text to be analyzed is “The test email – mail@javacodegeeks.com”. The token lists produced by the common analyzers are shown below:

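- WhitespaceAnalyzer: [The, test, email, –, mail@javacodegeeks.com]

- SimpleAnalyzer: [the, test, email, mail, javacodegeeks, com]

- StopAnalyzer: [test, email, mail, javacodegeeks, com]

- StandardAnalyzer: [test, email, mail, javacodegeeks.com] (since Lucene 3.1, StandardAnalyzer follows Unicode word-break rules and splits the address at "@"; ClassicAnalyzer and UAX29URLEmailAnalyzer keep mail@javacodegeeks.com as a single token)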
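The comparison above can be reproduced with a short program. This is a sketch assuming Lucene 4.2.1 (where the analyzer constructors take a Version argument); the class name AnalyzerComparison and the helper terms() are illustrative names, not Lucene API. It prints the token list each of the four analyzers produces for the sample text.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.core.SimpleAnalyzer;
import org.apache.lucene.analysis.core.StopAnalyzer;
import org.apache.lucene.analysis.core.WhitespaceAnalyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.util.Version;

// Hypothetical helper class comparing built-in analyzers (Lucene 4.2.1 API)
public class AnalyzerComparison {

    /** Returns the terms that the given analyzer produces for text. */
    public static List<String> terms(Analyzer analyzer, String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (TokenStream ts = analyzer.tokenStream("content", new StringReader(text))) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }

    public static void main(String[] args) throws IOException {
        // \u2013 is the en dash used in the sample text
        String text = "The test email \u2013 mail@javacodegeeks.com";
        Analyzer[] analyzers = {
            new WhitespaceAnalyzer(Version.LUCENE_42),
            new SimpleAnalyzer(Version.LUCENE_42),
            new StopAnalyzer(Version.LUCENE_42),
            new StandardAnalyzer(Version.LUCENE_42)
        };
        for (Analyzer a : analyzers) {
            System.out.println(a.getClass().getSimpleName() + ": " + terms(a, text));
            a.close();
        }
    }
}
```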

4. Inside an Analyzer

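An Analyzer produces a TokenStream. The stream is built from a Tokenizer, which splits the text into tokens, followed by zero or more TokenFilters, which modify, add or remove tokens.

public final TokenStream tokenStream(String fieldName, Reader reader) throws IOException

To define what analysis is done, a subclass implements createComponents(String, Reader) and returns the TokenStreamComponents (the Tokenizer plus its chain of filters) to use. The components are then reused across calls to tokenStream(String, Reader). In Lucene 5.0 and later, createComponents takes only the field name. In the example below, FooTokenizer, FooFilter and BarFilter stand for any concrete Tokenizer and TokenFilter classes.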

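Analyzer analyzer = new Analyzer() {
    @Override
    protected TokenStreamComponents createComponents(String fieldName, Reader reader) {
        // FooTokenizer, FooFilter and BarFilter are placeholders for real classes
        Tokenizer source = new FooTokenizer(reader);  // splits the text into tokens
        TokenStream filter = new FooFilter(source);   // first filter wraps the tokenizer
        filter = new BarFilter(filter);               // each further filter wraps the previous one
        return new TokenStreamComponents(source, filter);
    }
};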
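A concrete version of this pattern is sketched below, assuming the Lucene 4.2.1 API. The placeholders are replaced by WhitespaceTokenizer, LowerCaseFilter and StopFilter with the English stop-word set; the class name CustomAnalyzerDemo and the helper termsWithOffsets() are illustrative. The helper also reads OffsetAttribute to show that each token keeps its position in the original text.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.core.LowerCaseFilter;
import org.apache.lucene.analysis.core.StopAnalyzer;
import org.apache.lucene.analysis.core.StopFilter;
import org.apache.lucene.analysis.core.WhitespaceTokenizer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.analysis.tokenattributes.OffsetAttribute;
import org.apache.lucene.util.Version;

// Hypothetical demo class: a custom Tokenizer + TokenFilter chain (Lucene 4.2.1 API)
public class CustomAnalyzerDemo {

    /** Whitespace tokenizer, then lowercasing, then English stop-word removal. */
    public static Analyzer buildAnalyzer() {
        return new Analyzer() {
            @Override
            protected TokenStreamComponents createComponents(String fieldName, Reader reader) {
                Tokenizer source = new WhitespaceTokenizer(Version.LUCENE_42, reader);
                TokenStream filter = new LowerCaseFilter(Version.LUCENE_42, source);
                filter = new StopFilter(Version.LUCENE_42, filter, StopAnalyzer.ENGLISH_STOP_WORDS_SET);
                return new TokenStreamComponents(source, filter);
            }
        };
    }

    /** Returns entries of the form term[start-end], using offsets into the original text. */
    public static List<String> termsWithOffsets(Analyzer analyzer, String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (TokenStream ts = analyzer.tokenStream("content", new StringReader(text))) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            OffsetAttribute offset = ts.addAttribute(OffsetAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term + "[" + offset.startOffset() + "-" + offset.endOffset() + "]");
            }
            ts.end();
        }
        return out;
    }

    public static void main(String[] args) throws IOException {
        Analyzer analyzer = buildAnalyzer();
        System.out.println(termsWithOffsets(analyzer, "The Quick Fox")); // [quick[4-9], fox[10-13]]
        analyzer.close();
    }
}
```

"The" is lowercased to "the" and then removed by the stop filter, while the remaining tokens keep the offsets of their original, upper-case spelling.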

For concrete implementations bundled with Lucene, see the analysis modules described in the Analysis package documentation:

- Common: Analyzers for indexing content in different languages and domains.

- ICU: Exposes functionality from ICU to Apache Lucene.

- Kuromoji: Morphological analyzer for Japanese text.

- Morfologik: Dictionary-driven lemmatization for the Polish language.

- Phonetic: Analysis for indexing phonetic signatures (for sounds-alike search).

- Smart Chinese: Analyzer for Simplified Chinese, which indexes words.

- Stempel: Algorithmic Stemmer for the Polish Language.

- UIMA: Analysis integration with Apache UIMA.

5. Constructors and Methods

5.1 Fields

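- public static final Analyzer.ReuseStrategy GLOBAL_REUSE_STRATEGY: A predefined Analyzer.ReuseStrategy that reuses the same components for every field.

- public static final Analyzer.ReuseStrategy PER_FIELD_REUSE_STRATEGY: A predefined Analyzer.ReuseStrategy that reuses components per field by maintaining a Map of TokenStreamComponents per field name.

These constants come from later Lucene releases; in Lucene 4.2.1 the equivalent strategies are created with new Analyzer.GlobalReuseStrategy() and new Analyzer.PerFieldReuseStrategy().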

5.2 Constructors

- public Analyzer(): Constructor reusing the same set of components per thread across calls to tokenStream(String, Reader).

- public Analyzer(Analyzer.ReuseStrategy reuseStrategy): Constructor with a custom Analyzer.ReuseStrategy.
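A custom reuse strategy matters when createComponents builds a different chain depending on the field name, because the default strategy would hand the first field's components to every other field. The following is a sketch assuming Lucene 4.2.1, where the per-field strategy is the nested class Analyzer.PerFieldReuseStrategy; the class name PerFieldChainAnalyzer and the field name id are hypothetical.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.core.KeywordTokenizer;
import org.apache.lucene.analysis.core.LowerCaseFilter;
import org.apache.lucene.analysis.standard.StandardTokenizer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.util.Version;

// Hypothetical analyzer whose token chain depends on the field name (Lucene 4.2.1 API)
public class PerFieldChainAnalyzer extends Analyzer {

    public PerFieldChainAnalyzer() {
        // Chains differ per field, so components are cached per field instead of globally
        super(new PerFieldReuseStrategy());
    }

    @Override
    protected TokenStreamComponents createComponents(String fieldName, Reader reader) {
        if ("id".equals(fieldName)) {
            // Emit the whole value as one unchanged token
            return new TokenStreamComponents(new KeywordTokenizer(reader));
        }
        // All other fields: Unicode word segmentation followed by lowercasing
        Tokenizer source = new StandardTokenizer(Version.LUCENE_42, reader);
        TokenStream filter = new LowerCaseFilter(Version.LUCENE_42, source);
        return new TokenStreamComponents(source, filter);
    }

    /** Collects the terms produced for the given field and text. */
    public static List<String> terms(Analyzer analyzer, String field, String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (TokenStream ts = analyzer.tokenStream(field, new StringReader(text))) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }

    public static void main(String[] args) throws IOException {
        Analyzer analyzer = new PerFieldChainAnalyzer();
        System.out.println(terms(analyzer, "id", "ABC-123"));         // [ABC-123]
        System.out.println(terms(analyzer, "body", "ABC-123 Hello")); // [abc, 123, hello]
        analyzer.close();
    }
}
```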

NOTE: if you only want to use different analysis per field, it is easier to use a subclass of AnalyzerWrapper such as PerFieldAnalyzerWrapper instead.
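With PerFieldAnalyzerWrapper, the per-field case above needs no custom Analyzer subclass: you map field names to existing analyzers and supply a default for all other fields. This is a sketch assuming Lucene 4.2.1 and lucene-analyzers-common; the class name WrapperDemo and the field name id are illustrative.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.core.KeywordAnalyzer;
import org.apache.lucene.analysis.miscellaneous.PerFieldAnalyzerWrapper;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.util.Version;

// Hypothetical demo class: per-field analysis through PerFieldAnalyzerWrapper (Lucene 4.2.1 API)
public class WrapperDemo {

    /** StandardAnalyzer for every field except "id", which is kept as one keyword token. */
    public static Analyzer buildAnalyzer() {
        Map<String, Analyzer> perField = new HashMap<>();
        perField.put("id", new KeywordAnalyzer());
        return new PerFieldAnalyzerWrapper(new StandardAnalyzer(Version.LUCENE_42), perField);
    }

    /** Collects the terms produced for the given field and text. */
    public static List<String> terms(Analyzer analyzer, String field, String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (TokenStream ts = analyzer.tokenStream(field, new StringReader(text))) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }

    public static void main(String[] args) throws IOException {
        Analyzer analyzer = buildAnalyzer();
        System.out.println(terms(analyzer, "id", "ABC-123"));       // [ABC-123]
        System.out.println(terms(analyzer, "body", "The ABC-123")); // [abc, 123]
        analyzer.close();
    }
}
```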

5.3 Some major methods

Some of the major methods of the Analyzer class are listed below:

- protected abstract Analyzer.TokenStreamComponents createComponents(String fieldName): Creates a new Analyzer.TokenStreamComponents instance for this analyzer. In Lucene 4.x the signature is createComponents(String fieldName, Reader reader).

- TokenStream tokenStream(String fieldName, Reader reader): Returns a TokenStream suitable for fieldName, tokenizing the contents of reader.

- TokenStream tokenStream(String fieldName, String text): Returns a TokenStream suitable for fieldName, tokenizing the contents of text.

- int getPositionIncrementGap(String fieldName): Invoked before indexing an IndexableField instance if terms have already been added to that field.

- Analyzer.ReuseStrategy getReuseStrategy(): Returns the Analyzer.ReuseStrategy in use.

- protected Reader initReader(String fieldName, Reader reader): Override this if you want to add a CharFilter chain.

- void setVersion(Version v): Sets the version of Lucene whose analysis behavior this analyzer should emulate.

Some of these members, such as getReuseStrategy() and setVersion(Version), appear only in releases after 4.2.1, so check the Javadoc of the version you are using.
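Two of these hooks, initReader and getPositionIncrementGap, are overridden in the sketch below, assuming Lucene 4.2.1. initReader wraps the input in HTMLStripCharFilter (from lucene-analyzers-common) so the Tokenizer never sees HTML markup. The class name HtmlStrippingAnalyzer and the gap value of 100 are illustrative choices, not Lucene defaults.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.charfilter.HTMLStripCharFilter;
import org.apache.lucene.analysis.core.LowerCaseFilter;
import org.apache.lucene.analysis.standard.StandardTokenizer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.util.Version;

// Hypothetical analyzer using the initReader and getPositionIncrementGap hooks (Lucene 4.2.1 API)
public class HtmlStrippingAnalyzer extends Analyzer {

    @Override
    protected TokenStreamComponents createComponents(String fieldName, Reader reader) {
        Tokenizer source = new StandardTokenizer(Version.LUCENE_42, reader);
        return new TokenStreamComponents(source, new LowerCaseFilter(Version.LUCENE_42, source));
    }

    @Override
    protected Reader initReader(String fieldName, Reader reader) {
        // CharFilter: removes HTML markup before the Tokenizer reads the characters
        return new HTMLStripCharFilter(reader);
    }

    @Override
    public int getPositionIncrementGap(String fieldName) {
        // Leave a gap of 100 positions between values of a multi-valued field, so that
        // phrase queries with a smaller slop do not match across two separate values
        return 100;
    }

    /** Collects the terms produced for text. */
    public static List<String> terms(Analyzer analyzer, String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (TokenStream ts = analyzer.tokenStream("content", new StringReader(text))) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }

    public static void main(String[] args) throws IOException {
        Analyzer analyzer = new HtmlStrippingAnalyzer();
        System.out.println(terms(analyzer, "<b>Hello</b> <i>World</i>")); // [hello, world]
        analyzer.close();
    }
}
```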

6. Things to consider

- StandardAnalyzer is the most sophisticated of the analyzers covered here: it recognizes general token types, lowercases tokens and removes stop words.

- To define what analysis is done, a subclass defines its TokenStreamComponents in createComponents (createComponents(String, Reader) in Lucene 4.x). The components are then reused in each call to tokenStream(String, Reader).

- To run the examples above, you need the lucene-analyzers-common-x.x.x and lucene-queryparser-x.x.x jar files along with the lucene-core jar.

7. Download the source code

You can download the full source code of the example here: Lucene Example Code