Lucene Indexing Example

In this example, we are going to learn about Lucene indexing. In the previous three examples we went through three of the important classes used in the indexing process. Here, we go through the fundamental concepts behind the whole indexing process. This post therefore demonstrates the indexing approach in Lucene, as well as the options and features that Lucene provides through its most important classes.

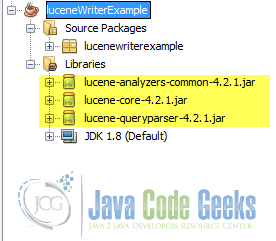

The code in this example was developed in NetBeans IDE 8.0.2 and uses Lucene version 4.2.1. You may want to try it with a later version as well, but note that some of the APIs used here (such as the Version arguments) changed in later releases.

1. Indexing Process

Indexing literally refers to the process of organizing information with a system of indexes so that items are easier to access, retrieve or search across the whole source of information. This is the same reason books have an index or a table of contents.
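To make the book analogy concrete, here is a minimal plain-Java sketch of a back-of-the-book index, often called an inverted index. Each word points to the pages that contain it, so a lookup does not have to reread every page. The BookIndex class and its methods are hypothetical illustrations, not Lucene classes.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

/** A toy back-of-the-book index: each word maps to the pages it appears on. */
class BookIndex {
    // TreeMap keeps the words in alphabetical order, like a printed index.
    private final Map<String, Set<Integer>> entries = new TreeMap<>();

    /** Files every word of a page under that page's number. */
    void addPage(int pageNumber, String text) {
        for (String word : text.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (word.isEmpty()) continue; // split yields "" when text starts with a delimiter
            entries.computeIfAbsent(word, w -> new TreeSet<>()).add(pageNumber);
        }
    }

    /** Looks a word up directly instead of rereading every page. */
    Set<Integer> pagesFor(String word) {
        return entries.getOrDefault(word.toLowerCase(Locale.ROOT), new TreeSet<>());
    }

    public static void main(String[] args) {
        BookIndex index = new BookIndex();
        index.addPage(1, "Lucene introduction");
        index.addPage(2, "Lucene projects and uses");
        index.addPage(3, "Demos");
        System.out.println(index.pagesFor("Lucene")); // prints [1, 2]
    }
}
```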

Apache Lucene is an open-source search project whose subprojects include Lucene Core, Solr, PyLucene and the Open Relevance Project. Lucene Core in particular provides Java-based indexing and search technology, as well as spellchecking, hit highlighting and advanced analysis/tokenization capabilities.

The key to Lucene's indexing and search technology is an index stored in an index directory. Indexing is therefore the primary objective of Lucene Core.

Lucene indexing processes the input data according to the fields defined in a Document object. Each document holds several fields, and the fields that need analysis are processed by an analyzer such as StandardAnalyzer. The IndexWriter, configured through an IndexWriterConfig, then writes each document's data into the index directory.

Note: You need to add both the lucene-analyzers-common-x.x.x and lucene-queryparser-x.x.x jar files, along with the lucene-core-x.x.x jar file, to your classpath to implement this Lucene example.
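The flow described above (document fields, then the analyzer, then the writer and the index) can be modelled in plain Java. This is a sketch under stated assumptions: ToyField, analyze() and ToyWriter are hypothetical stand-ins, not Lucene's Document, Analyzer or IndexWriter. The "analyzer" here simply lowercases and splits on non-alphanumeric characters.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/**
 * Conceptual model of the flow: document fields -> analyzer -> writer -> index.
 * ToyField, analyze() and ToyWriter are hypothetical stand-ins, not Lucene's API.
 */
class IndexingPipelineSketch {

    /** A field value plus a flag saying whether the analyzer should tokenize it. */
    static final class ToyField {
        final String value;
        final boolean analyzed;

        ToyField(String value, boolean analyzed) {
            this.value = value;
            this.analyzed = analyzed;
        }
    }

    /** Stand-in analyzer: lowercase, then split on anything that is not a letter or digit. */
    static List<String> analyze(String text) {
        List<String> tokens = new ArrayList<>();
        for (String t : text.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{Nd}]+")) {
            if (!t.isEmpty()) tokens.add(t);
        }
        return tokens;
    }

    /** Stand-in writer: postings keyed by "field:term", each holding matching document ids. */
    static final class ToyWriter {
        final Map<String, Set<Integer>> postings = new HashMap<>();
        private int nextDocId = 0;

        int addDocument(Map<String, ToyField> doc) {
            int docId = nextDocId++;
            for (Map.Entry<String, ToyField> e : doc.entrySet()) {
                ToyField f = e.getValue();
                // Analyzed fields become several terms; the rest are indexed as one exact term.
                List<String> terms = f.analyzed ? analyze(f.value) : Collections.singletonList(f.value);
                for (String term : terms) {
                    postings.computeIfAbsent(e.getKey() + ":" + term, k -> new TreeSet<>()).add(docId);
                }
            }
            return docId;
        }
    }

    public static void main(String[] args) {
        ToyWriter writer = new ToyWriter();
        Map<String, ToyField> doc = new LinkedHashMap<>();
        doc.put("title", new ToyField("Day second, part one: Lucene Projects.", true));
        doc.put("course_code", new ToyField("3437RJ1", false));
        writer.addDocument(doc);
        System.out.println(writer.postings.get("title:projects"));      // prints [0]
        System.out.println(writer.postings.get("course_code:3437RJ1")); // prints [0]
    }
}
```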

2. Major Classes

The previous three examples covered three important classes for the indexing process: primarily IndexWriter, followed by QueryParser and StandardAnalyzer.

In the IndexWriter post, we went through the indexing, writing, searching and displaying steps of an indexing example. The QueryParser post demonstrated the different search options and features that Lucene provides through the QueryParser class. Finally, the StandardAnalyzer post demonstrated the contexts in which Lucene's StandardAnalyzer class is used.

2.1. IndexWriter Class

The IndexWriter class is the basic class in Lucene Core for creating and maintaining an index. It provides methods for the common indexing tasks, such as adding, updating and deleting documents.

Usage

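```java
// analyzer is a StandardAnalyzer (see 2.3); the IndexWriter constructor throws IOException
Directory index = new RAMDirectory(); // in-memory index
// Directory index = FSDirectory.open(new File("index-dir")); // on-disk index
IndexWriterConfig config = new IndexWriterConfig(Version.LUCENE_42, analyzer);
IndexWriter writer = new IndexWriter(index, config);
```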

Note: You need lucene-core-4.2.1.jar on your classpath to use IndexWriter.

2.2. QueryParser Class

The QueryParser class, which comes from Lucene's queryparser module, parses query strings into Lucene queries. Its methods let us carry out searching tasks with the wide range of search options that Lucene's query syntax provides.

QueryParser works much like a lexer: it interprets any valid query string and turns it into a Lucene query. The query string we supply is thus translated into a query that Lucene understands and can execute, which makes QueryParser a vital part of Lucene. Because it works like a lexer, it is driven by a grammar, and that grammar is the Lucene query syntax.

Usage

```java
Query q = new QueryParser(Version.LUCENE_42, "title", analyzer).parse(querystr); // parse() throws ParseException
```

Note: You need lucene-queryparser-4.2.1.jar on your classpath to use QueryParser (org.apache.lucene.queryparser.classic.QueryParser).
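To illustrate the lexer idea described above, here is a deliberately tiny query lexer in plain Java. It splits a query string into clauses, sends bare words to a default field, and lowercases terms as an analyzer would. It is a hypothetical sketch and not Lucene's QueryParser grammar: phrases, boolean operators, wildcards, ranges and boosts are all ignored. The default field "title" mirrors the one passed to QueryParser in the usage line.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/**
 * A deliberately tiny query lexer: turns a query string into "field:term" clauses.
 * It is NOT Lucene's QueryParser grammar: phrases, boolean operators, wildcards,
 * ranges and boosts are all ignored. Class and method names are hypothetical.
 */
class ToyQueryLexer {

    static List<String> parse(String defaultField, String queryString) {
        List<String> clauses = new ArrayList<>();
        for (String token : queryString.trim().split("\\s+")) {
            if (token.isEmpty()) continue; // an empty query yields a single "" token
            int colon = token.indexOf(':');
            // "field:term" names its field explicitly; a bare word goes to the default field.
            String field = colon > 0 ? token.substring(0, colon) : defaultField;
            String term = colon > 0 ? token.substring(colon + 1) : token;
            // Lowercase the term, as a lowercasing analyzer would.
            clauses.add(field + ":" + term.toLowerCase(Locale.ROOT));
        }
        return clauses;
    }

    public static void main(String[] args) {
        System.out.println(parse("title", "Second title:Demos"));
        // prints [title:second, title:demos]
    }
}
```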

2.3. StandardAnalyzer Class

The StandardAnalyzer class is the basic analyzer in the lucene-analyzers-common library. It chains a StandardTokenizer with StandardFilter, LowerCaseFilter and StopFilter, using a list of English stop words. It is more sophisticated than simple whitespace splitting: since Lucene 3.1, StandardTokenizer follows the Unicode word-break rules (UAX #29), so punctuation, numbers and names are handled sensibly. (The older grammar that kept e-mail addresses as single tokens now lives in ClassicAnalyzer.)

Usage

```java
StandardAnalyzer analyzer = new StandardAnalyzer(Version.LUCENE_42);
```

Note: You need lucene-analyzers-common-4.2.1.jar on your classpath to use StandardAnalyzer.
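The analyzer chain described above (tokenize, lowercase, remove stop words) can be approximated in a few lines of plain Java. This is a hedged sketch, not StandardAnalyzer itself. The splitting rule is far simpler than the Unicode word-break rules, and STOP_WORDS is a small sample of common English words rather than Lucene's exact list.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

/**
 * Approximates the analyzer chain described above: tokenize, lowercase, drop stop words.
 * The split rule is much simpler than StandardTokenizer's Unicode word-break rules,
 * and STOP_WORDS is a small sample, not Lucene's exact English stop-word list.
 */
class ToyStandardAnalyzer {

    static final Set<String> STOP_WORDS = new HashSet<>(Arrays.asList(
            "a", "an", "and", "are", "as", "at", "be", "by", "for", "in",
            "is", "it", "of", "on", "or", "the", "to", "with"));

    static List<String> analyze(String text) {
        List<String> out = new ArrayList<>();
        // 1. "Tokenizer": split on anything that is not a letter or a digit.
        for (String token : text.split("[^\\p{L}\\p{Nd}]+")) {
            if (token.isEmpty()) continue;
            // 2. "Lowercase filter".
            String lower = token.toLowerCase(Locale.ROOT);
            // 3. "Stop filter": drop very common English words.
            if (!STOP_WORDS.contains(lower)) out.add(lower);
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(analyze("The Uses of the Lucene Index"));
        // prints [uses, lucene, index]
    }
}
```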

3. Create a Document object

First, we need to create a document with the required fields. Consider the following steps when creating a document:

- Extract the data from the target source (a text file or any other document file).

- Design the key-value pairs (the field hierarchy) for the various fields in the document.

- Decide whether each field needs to be analyzed, keeping searching in mind (what is needed and what can be left out).

- Create the document object and add those fields to it.
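The four steps above can be walked through in plain Java. This sketch assumes a hypothetical source format with one course per line, written as `COURSE_CODE|Title`. The format, the ToyField class and the list-based "document" are all illustrative assumptions, not Lucene types.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Walks through the four steps above for a hypothetical source format with one course
 * per line, written as "COURSE_CODE|Title". The format, ToyField and the list-based
 * "document" are illustrative assumptions, not Lucene types.
 */
class DocumentBuilderSketch {

    /** One field of a toy document: its name, its value, and whether it gets analyzed. */
    static final class ToyField {
        final String name;
        final String value;
        final boolean analyzed;

        ToyField(String name, String value, boolean analyzed) {
            this.name = name;
            this.value = value;
            this.analyzed = analyzed;
        }

        @Override
        public String toString() {
            return name + "=" + value + (analyzed ? " (analyzed)" : " (exact)");
        }
    }

    static List<List<ToyField>> buildDocuments(String sourceText) {
        List<List<ToyField>> docs = new ArrayList<>();
        // Step 1: extract the raw records from the source, one per line.
        for (String line : sourceText.split("\\R")) {
            String[] parts = line.split("\\|", 2);
            if (parts.length < 2) continue; // skip blank or malformed lines
            // Steps 2 and 3: name each value and decide whether it is analyzed.
            List<ToyField> doc = new ArrayList<>();
            doc.add(new ToyField("course_code", parts[0].trim(), false)); // identifier: exact match
            doc.add(new ToyField("title", parts[1].trim(), true));        // free text: split into words
            // Step 4: the collected fields make up one document.
            docs.add(doc);
        }
        return docs;
    }

    public static void main(String[] args) {
        String source = "3436NRX|Day first : Lucene Introduction.\n"
                + "3437RJ1|Day second , part one : Lucene Projects.\n";
        for (List<ToyField> doc : buildDocuments(source)) {
            System.out.println(doc);
        }
    }
}
```

In real Lucene code, the exact fields would typically become StringField instances and the analyzed ones TextField instances, as the addDoc function in this example does.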

4. Create an IndexWriter

The IndexWriter class is the primary class used during the indexing process. Consider the following steps when creating an IndexWriter:

- Create a Directory object that points to the location where the index is to be stored.

- Create an IndexWriter object.

- Initialize it with the index directory and an IndexWriterConfig that holds a StandardAnalyzer, the version information, and any other required or optional parameters.

5. Getting Started with IndexWriter

For an indexing process, we need to create at least one IndexWriter object. To initialize it, we can use a StandardAnalyzer instance with version information, along with any other required or optional parameters.

Note: You need to import “lucene-analyzers-common-4.2.1.jar” to use StandardAnalyzer.

Initializing StandardAnalyzer

```java
StandardAnalyzer analyzer = new StandardAnalyzer(Version.LUCENE_42); // creates a StandardAnalyzer object
```

5.1. Indexing

You can create an index Directory and configure the writer with the analyzer instance through an IndexWriterConfig. You can also give a file path to use as the index directory. This is a must for larger data sets, because a RAMDirectory keeps the whole index in memory.

Initializing IndexWriter

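```java
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.RAMDirectory;
import org.apache.lucene.util.Version;

Directory index = new RAMDirectory(); // in-memory index
// Directory index = FSDirectory.open(new File("index-dir")); // on-disk alternative
IndexWriterConfig config = new IndexWriterConfig(Version.LUCENE_42, analyzer);
IndexWriter writer = new IndexWriter(index, config); // throws IOException
```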

The writer object is created from the index directory and the IndexWriterConfig object. As good programming practice, always close the writer once the writing task is complete. Closing commits the pending changes, which completes the indexing process.
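Why closing matters can be modelled with a hypothetical writer that buffers added documents and only publishes them to the shared "index" on commit or close. This plain-Java sketch mirrors the behaviour described above and is not Lucene's internals. Making the toy writer AutoCloseable lets try-with-resources close it even if an exception is thrown.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Models why the writer must be closed: a hypothetical writer that buffers added
 * documents and only publishes them to the shared "index" on commit or close.
 * This mirrors the behavior described in the text, not Lucene's internals.
 */
class CommitOnCloseSketch {

    static final class ToyIndexWriter implements AutoCloseable {
        private final List<String> committed;                   // what a new reader would see
        private final List<String> pending = new ArrayList<>(); // buffered, not yet committed

        ToyIndexWriter(List<String> committedStore) {
            this.committed = committedStore;
        }

        void addDocument(String doc) {
            pending.add(doc);
        }

        void commit() {
            committed.addAll(pending);
            pending.clear();
        }

        @Override
        public void close() {
            commit(); // closing publishes whatever is still pending
        }
    }

    public static void main(String[] args) {
        List<String> index = new ArrayList<>();
        try (ToyIndexWriter writer = new ToyIndexWriter(index)) {
            writer.addDocument("Day first : Lucene Introduction.");
            System.out.println("before close: " + index.size()); // prints before close: 0
        }
        System.out.println("after close: " + index.size());      // prints after close: 1
    }
}
```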

5.2. Adding Fields to the Document Object and Writing It

Creating a Document Object

```java
Document doc = new Document();
doc.add(new TextField("title", title, Field.Store.YES));
doc.add(new StringField("course_code", courseCode, Field.Store.YES));
writer.addDocument(doc);
```

Instead of repeating this lengthy process for every new entry, we can write a generic function that adds a new document. Each needed field is added with its field name (tag) and value.

addDoc Function

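```java
// Needs imports from org.apache.lucene.document (Document, Field, StringField, TextField),
// plus org.apache.lucene.index.IndexWriter and java.io.IOException
private static void addDoc(IndexWriter w, String title, String courseCode) throws IOException {
    Document doc = new Document();
    // TextField is analyzed (tokenized), so single words of the title are searchable.
    doc.add(new TextField("title", title, Field.Store.YES));
    // Here, we use a StringField for course_code to avoid tokenizing.
    doc.add(new StringField("course_code", courseCode, Field.Store.YES));
    w.addDocument(doc);
}
```

Now the writer object can use the addDoc function to write our data entries.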

Writing to index

```java
addDoc(writer, "Day first : Lucene Introduction.", "3436NRX");
addDoc(writer, "Day second , part one : Lucene Projects.", "3437RJ1");
addDoc(writer, "Day second , part two: Lucene Uses.", "3437RJ2");
addDoc(writer, "Day third : Lucene Demos.", "34338KRX");
writer.close(); // commits the added documents
```

5.3. Querying

The second task in this example is building a query string for our search. For querying, we parse the query string with QueryParser, using the same analyzer as for indexing. Next, we open an IndexReader on the index directory and create an IndexSearcher on top of it. Finally, we collect the search results with a TopScoreDocCollector into an array of ScoreDoc. That array can then be used to display the results to the user through whatever interface is needed.

Creating QueryString

```java
String querystr = "Second"; // lowercased by the analyzer, so it matches "second" in the titles
Query q = new QueryParser(Version.LUCENE_42, "title", analyzer).parse(querystr);
```

5.4. Searching

Now that indexing is done, we can search the index.

```java
int hitsPerPage = 10;
IndexReader reader = DirectoryReader.open(index);
IndexSearcher searcher = new IndexSearcher(reader);
// Collects the hitsPerPage best-scoring documents
TopScoreDocCollector collector = TopScoreDocCollector.create(hitsPerPage, true);
searcher.search(q, collector);
ScoreDoc[] hits = collector.topDocs().scoreDocs;
```
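The idea behind a top-N collector such as TopScoreDocCollector is to keep only the best hitsPerPage (document, score) pairs while matches stream in. A bounded min-heap does this: the weakest retained hit is always on top, ready to be evicted. Below is a plain-Java sketch of that idea. The Hit class and the scores are made up for illustration, and ties are not broken the way Lucene breaks them.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

/**
 * The idea behind a top-N collector such as TopScoreDocCollector: keep only the best
 * numHits (doc, score) pairs in a bounded min-heap while matches stream in.
 * Plain-Java sketch; Hit and the scores below are made up, and ties are not
 * broken the way Lucene breaks them.
 */
class TopNCollectorSketch {

    static final class Hit {
        final int doc;
        final float score;

        Hit(int doc, float score) {
            this.doc = doc;
            this.score = score;
        }

        @Override
        public String toString() {
            return "doc=" + doc + " score=" + score;
        }
    }

    private final int numHits;
    // Min-heap on score: the weakest retained hit sits on top and is evicted first.
    private final PriorityQueue<Hit> heap =
            new PriorityQueue<>(Comparator.comparingDouble((Hit h) -> h.score));

    TopNCollectorSketch(int numHits) {
        if (numHits < 1) throw new IllegalArgumentException("numHits must be >= 1");
        this.numHits = numHits;
    }

    /** Called once per matching document, in any order. */
    void collect(int doc, float score) {
        if (heap.size() < numHits) {
            heap.add(new Hit(doc, score));
        } else if (score > heap.peek().score) {
            heap.poll(); // drop the current weakest hit
            heap.add(new Hit(doc, score));
        }
    }

    /** Returns the retained hits from best to worst, like topDocs().scoreDocs. */
    List<Hit> topDocs() {
        List<Hit> result = new ArrayList<>(heap);
        result.sort(Comparator.comparingDouble((Hit h) -> h.score).reversed());
        return result;
    }

    public static void main(String[] args) {
        TopNCollectorSketch collector = new TopNCollectorSketch(2); // like hitsPerPage = 2
        float[] scores = {0.3f, 1.2f, 0.7f, 0.9f};
        for (int doc = 0; doc < scores.length; doc++) {
            collector.collect(doc, scores[doc]);
        }
        System.out.println(collector.topDocs());
        // prints [doc=1 score=1.2, doc=3 score=0.9]
    }
}
```

The heap keeps memory proportional to hitsPerPage rather than to the number of matching documents, which is why a page size is passed to the collector up front.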

5.5. Displaying results

Finally, the search results need to be displayed.

Displaying results

```java
System.out.println("Query string: " + querystr);
System.out.println("Found " + hits.length + " hits.");
for (int i = 0; i < hits.length; ++i) {
    int docId = hits[i].doc;
    Document d = searcher.doc(docId);
    System.out.println((i + 1) + ". " + d.get("course_code") + "\t" + d.get("title"));
}
// Finally, close the reader
reader.close();
```

With this, we have completed a simple demonstration of Lucene indexing.

6. Things to consider

- Always remember to close the IndexWriter. If it is left open, recently added documents may not yet be committed to the index directory.

- A not-analyzed field (such as a StringField) is not broken down into individual tokens, so it must match the query string exactly.

- You need to include both the lucene-analyzers-common-x.x.x and lucene-queryparser-x.x.x jar files, along with the lucene-core-x.x.x jar file, to run the examples above.

- You must specify the required Version compatibility when creating a StandardAnalyzer.

- StandardAnalyzer's tokenizer should be a good fit for most European-language documents.

- If this tokenizer does not suit your scenario, consider copying its source code into your project and maintaining your own grammar-based tokenizer.
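The not-analyzed point in the list above can be contrasted with an analyzed field in a few lines of plain Java. This is a hedged sketch: the lowercase-and-split rule stands in for a real analyzer, and the method names are hypothetical rather than Lucene API.

```java
import java.util.Arrays;
import java.util.Locale;

/**
 * Contrasts an analyzed field (like TextField) with a not-analyzed one (like StringField).
 * The lowercase-and-split rule is a stand-in for a real analyzer; this is not Lucene code.
 */
class AnalyzedVsExactSketch {

    /** Analyzed: the value is broken into lowercase tokens, and any single token can match. */
    static boolean matchesAnalyzed(String fieldValue, String queryTerm) {
        if (queryTerm.isEmpty()) return false;
        String[] tokens = fieldValue.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{Nd}]+");
        return Arrays.asList(tokens).contains(queryTerm.toLowerCase(Locale.ROOT));
    }

    /** Not analyzed: the whole value is one term, so only an identical string matches. */
    static boolean matchesExact(String fieldValue, String queryTerm) {
        return fieldValue.equals(queryTerm);
    }

    public static void main(String[] args) {
        String title = "Day second , part one : Lucene Projects.";
        System.out.println(matchesAnalyzed(title, "Projects")); // prints true
        System.out.println(matchesExact(title, "Projects"));    // prints false
        System.out.println(matchesExact("3437RJ1", "3437RJ1")); // prints true
        System.out.println(matchesExact("3437RJ1", "3437rj1")); // prints false
    }
}
```

One practical consequence, worth checking against your Lucene version: QueryParser runs query text through the analyzer, so with StandardAnalyzer a course_code query would be lowercased and miss the StringField value. A TermQuery on the exact value avoids that.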

7. Download the NetBeans Project

You can download the full source code of the example here: Lucene Example Code
