Understanding Kafka Topics and Partitions

Apache Kafka is an open-source stream-processing platform designed for handling real-time data feeds. It operates on a publish-subscribe model, allowing seamless communication between producers who publish data to topics and consumers who subscribe to these topics. Kafka uses topics, partitions, and replication to manage data organization, parallel processing, and fault tolerance. It enables high-throughput, fault-tolerant, and scalable data streaming, making it ideal for various applications such as log aggregation, event sourcing, real-time analytics, and messaging systems. Kafka’s ability to process and store massive volumes of data in real time has made it a fundamental tool in modern data architectures and stream processing workflows.

1. Introduction

Apache Kafka is an open-source stream-processing platform, originally developed at LinkedIn and now maintained by the Apache Software Foundation. It is designed to handle real-time data feeds efficiently and in a fault-tolerant manner. Its architecture follows a publish-subscribe model: producers publish messages to topics, and consumers subscribe to those topics to receive the data.

1.1 Key Features of Kafka

- Scalability: Kafka is highly scalable, allowing it to handle large volumes of data and high-throughput workloads.

- Fault Tolerance: Kafka ensures fault tolerance by replicating data across multiple brokers. If one broker fails, data remains accessible from its replicas.

- Real-time Processing: Kafka supports real-time processing of streaming data, making it ideal for applications requiring low latency.

- Partitioning: Data in Kafka topics is partitioned, enabling parallel processing and efficient utilization of resources.

- Durability: Kafka persists messages to disk and retains them for a configurable period, so data is not lost even if a consumer fails to process it immediately.

1.2 Pros of Using Apache Kafka

- High Throughput: Kafka can handle millions of events per second, making it suitable for applications with demanding throughput requirements.

- Scalability: Kafka scales horizontally, allowing you to add more brokers to the cluster to handle increased loads.

- Reliability: With replication enabled, Kafka offers strong durability guarantees, so data survives the failure of individual brokers or disks.

- Real-time Analytics: Kafka enables real-time data processing, making it valuable for analytics, monitoring, and dashboard applications.

- Flexibility: Kafka integrates seamlessly with various data processing frameworks and can be used in diverse use cases, including log aggregation, event sourcing, and more.

1.3 Cons of Using Apache Kafka

- Complexity: Setting up and configuring a Kafka cluster can be complex, requiring careful consideration of various parameters.

- Learning Curve: Users new to Kafka might face a steep learning curve due to its extensive features and configuration options.

- Operational Overhead: Managing and monitoring Kafka clusters requires significant operational effort and expertise.

- Resource Intensive: Kafka can be resource-intensive, especially in large-scale deployments, requiring substantial hardware resources.

2. Understanding Kafka Topics, Partitions, and Consumer Groups

In Apache Kafka, topics, partitions, and consumer groups are fundamental concepts that enable the efficient processing and distribution of data streams within the Kafka ecosystem.

2.1 Kafka Topic

A topic in Kafka is a named category or feed to which records (messages) are published. Producers write data to topics, and consumers read data from topics. Topics categorize data streams, so multiple consumers can subscribe to and process only the streams they care about. Each record consists of an optional key, a value, a timestamp, and optional headers.

- Topics categorize the stream of records.

- Producers publish messages to topics.

- Consumers subscribe to topics to read messages.

2.2 Kafka Partition

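A partition is a subdivision of a Kafka topic. A topic can be divided into multiple partitions, which are distributed across different servers (brokers) in a Kafka cluster so that data can be processed in parallel. Each partition is an ordered, immutable sequence of messages; ordering is guaranteed only within a partition, not across the whole topic. With the default partitioner, records that share a key are written to the same partition, which keeps related events in order. Partitions enable Kafka to handle high throughput because multiple consumers can read from different partitions concurrently.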

- Partitions allow parallel processing of data.

- Each partition represents an ordered, immutable sequence of messages.

- Partitions enable horizontal scaling and fault tolerance.
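The partition behavior described above can be illustrated with a small in-memory model. This is only a sketch under stated assumptions, not Kafka's implementation: the class name `TopicSketch` and its methods are hypothetical, each partition is a plain list whose index plays the role of the offset, and `String.hashCode` stands in for the murmur2 hash that Kafka's default partitioner applies to the serialized key.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified in-memory model of one topic; not Kafka's actual implementation. */
final class TopicSketch {
    // One append-only list per partition; the list index plays the role of the offset.
    private final List<List<String>> partitions = new ArrayList<>();

    TopicSketch(int numPartitions) {
        if (numPartitions < 1) {
            throw new IllegalArgumentException("need at least one partition");
        }
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<>());
        }
    }

    /** Maps a key to a partition. Assumption: String.hashCode replaces Kafka's murmur2 hash. */
    int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), partitions.size());
    }

    /** Appends a value to the key's partition and returns the offset it was written at. */
    long append(String key, String value) {
        List<String> log = partitions.get(partitionFor(key));
        log.add(value);
        return log.size() - 1;
    }

    /** Returns a read-only copy of one partition's records in offset order. */
    List<String> read(int partition) {
        return List.copyOf(partitions.get(partition));
    }

    public static void main(String[] args) {
        TopicSketch topic = new TopicSketch(3);
        topic.append("order-42", "created");
        topic.append("order-7", "created");
        topic.append("order-42", "paid");
        // Both "order-42" events sit in the same partition, in the order they were sent.
        System.out.println(topic.read(topic.partitionFor("order-42")));
    }
}
```

Because the partition is derived from the key, all events for one key stay in a single ordered log, while events for different keys may spread across partitions and can be consumed in parallel.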

2.3 Kafka Consumer Groups

A consumer group is a collection of consumers that work together to consume and process data from one or more topics. Kafka assigns each partition of a subscribed topic to exactly one consumer in the group, so the group's members share the partitions among themselves. A consumer may own several partitions, and consumers beyond the number of partitions stay idle. This parallelizes consumption and allows large volumes of data to be processed efficiently.

- Consumers within a group work together to consume data.

- Each partition is read by exactly one consumer in the group.

- Consumer groups enable parallel processing and load balancing.
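How partitions are shared inside a group can be sketched with a simple round-robin hand-out. This is a toy model: the class `GroupAssignmentSketch` and the consumer names are hypothetical, and real Kafka delegates the decision to a configurable partition assignor whose result may differ from this one.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy model of partition assignment inside one consumer group. */
final class GroupAssignmentSketch {

    /** Hands out partitions 0..numPartitions-1 to the consumers in round-robin order. */
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        if (consumers.isEmpty()) {
            return assignment;
        }
        for (int partition = 0; partition < numPartitions; partition++) {
            String owner = consumers.get(partition % consumers.size());
            assignment.get(owner).add(partition);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // Two consumers share three partitions: one of them owns two.
        System.out.println(assign(List.of("c1", "c2"), 3));             // {c1=[0, 2], c2=[1]}
        // Four consumers, three partitions: the fourth consumer stays idle.
        System.out.println(assign(List.of("c1", "c2", "c3", "c4"), 3)); // {c1=[0], c2=[1], c3=[2], c4=[]}
        // If c1 leaves the group, the remaining consumer takes over every partition.
        System.out.println(assign(List.of("c2"), 3));                   // {c2=[0, 1, 2]}
    }
}
```

The last call mirrors what happens after a consumer failure: the group is rebalanced and the surviving members pick up the orphaned partitions.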

2.4 Use Cases and Benefits

- Scalability: Consumer groups enable horizontal scaling, allowing the addition of more consumers to handle increasing data volumes.

- Fault Tolerance: If a consumer fails, its partitions can be reassigned to other consumers, ensuring continuous data processing.

- Parallel Processing: Consumer groups allow for parallel data processing, improving overall throughput.

- Load Balancing: Kafka automatically balances partitions across consumers, ensuring an even workload distribution.

3. Setting up Apache Kafka on Docker

Docker is an open-source platform for containerization: it packages applications and their dependencies into standardized units called containers. Containers are lightweight, isolated, and portable, so an application runs in the same environment on every system, which avoids most compatibility issues and dependency conflicts. This makes it easy to build, share, and deploy applications in a consistent and reproducible manner, and it is why Docker is a popular choice for running infrastructure such as Kafka locally.

Using Docker Compose simplifies the process by defining the services, their dependencies, and network configuration in a single file. It allows for easier management and scalability of the environment. Make sure you have Docker and Docker Compose installed on your system before proceeding with these steps. To set up Apache Kafka on Docker using Docker Compose, follow these steps.

3.1 Creating Docker Compose file

Create a file called docker-compose.yml and open it for editing. Add the following content to the file and save it once done.

docker-compose.yml

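version: '3'

services:
  zookeeper:
    image: confluentinc/cp-zookeeper:latest
    container_name: zookeeper
    ports:
      - "2181:2181"
    environment:
      # The Confluent ZooKeeper image requires the client port to be set
      ZOOKEEPER_CLIENT_PORT: 2181
    networks:
      - kafka-network

  kafka:
    # ZooKeeper mode needs a Kafka release earlier than 4.0;
    # consider pinning a matching image tag instead of latest
    image: confluentinc/cp-kafka:latest
    container_name: kafka
    depends_on:
      - zookeeper
    ports:
      - "9092:9092"
    environment:
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://localhost:9092
      # Single broker, so the internal offsets topic cannot be replicated
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
    networks:
      - kafka-network

networks:
  kafka-network:
    driver: bridge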

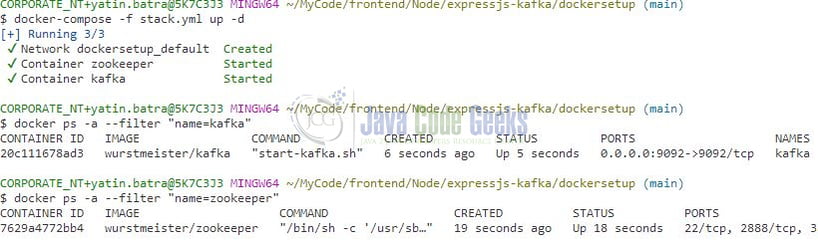

3.2 Running the Kafka containers

Open a terminal or command prompt in the same directory as the docker-compose.yml file. Start the Kafka containers by running the following command:

Start containers

docker-compose up -d

This command will start the ZooKeeper and Kafka containers in detached mode, running in the background.
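To confirm that the containers are accepting connections, one option is a small TCP check from Java. This is a hedged sketch: the class `BrokerPortCheck` is hypothetical, the ports are the ones published in the Compose file above, and an open port only shows that something is listening, not that the broker is fully started or healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Checks whether a TCP port accepts connections; not a full Kafka health check. */
final class BrokerPortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, or unknown host
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("ZooKeeper 2181 reachable: " + isReachable("localhost", 2181, 2000));
        System.out.println("Kafka 9092 reachable: " + isReachable("localhost", 9092, 2000));
    }
}
```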

To stop and remove the containers, as well as the network created, use the following command:

Stop containers

docker-compose down

4. Apache Kafka in Spring Boot

Let us put these concepts into practice. To get started with Kafka in Spring Boot, follow these steps.

4.1 Creating a Spring Boot Project

Set up a new Spring Boot project or use an existing one. Include the necessary dependencies in your project’s pom.xml file.

pom.xml

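<!-- Spring for Apache Kafka: KafkaTemplate, @KafkaListener, KafkaAdmin -->
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>

<!-- Needed for the REST controller in section 4.6 -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>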

4.2 Configure Kafka properties

In your project’s application.properties file, configure the Kafka connection and topic properties.

| Property | Value | Description |
|---|---|---|
| Kafka connection details | spring.kafka.bootstrap-servers=localhost:9092 | The bootstrap servers used for the initial connection to Kafka, here “localhost:9092”. The client contacts them first and discovers the other brokers in the cluster through them. |
| Kafka consumer group ID | spring.kafka.consumer.group-id=my-group | The consumer group ID for the Kafka consumer, here “my-group”. Consumers that share a group ID divide the topic's partitions among themselves and process messages in parallel. |
| Kafka topic name | spring.kafka.topic-name=my-topic | A custom application property (not a built-in Spring Boot key) holding the name of the topic the application publishes to and consumes from, here “my-topic”. |
| Kafka topic partitions | spring.kafka.topic-partitions=3 | A custom application property defining the number of partitions for the topic. Partitions enable parallel processing and scalability within a topic. Here the topic is created with 3 partitions. |
| Kafka topic replication factor | spring.kafka.topic-replication-factor=1 | A custom application property defining the replication factor for the topic. Replication provides fault tolerance by keeping copies of each partition on multiple brokers. A replication factor of 1 means each partition exists as a single copy with no redundancy, which is all a single-broker setup allows. |

application.properties

# Kafka connection details
spring.kafka.bootstrap-servers=localhost:9092
spring.kafka.consumer.group-id=my-group

# Kafka topic details
spring.kafka.topic-name=my-topic
spring.kafka.topic-partitions=3
spring.kafka.topic-replication-factor=1
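The effect of the replication factor configured above can be made concrete with a toy model. The sketch below is illustrative only: the class `ReplicaPlacementSketch` is hypothetical, brokers are numbered from 0, and replicas are placed round-robin, which is a simplification of how Kafka actually distributes them. It shows which partitions stay available when a broker fails.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Toy model of replica placement; Kafka's real assignment logic is more involved. */
final class ReplicaPlacementSketch {

    /** Brokers that hold a copy of the partition, placed round-robin (an assumption of this sketch). */
    static List<Integer> replicasFor(int partition, int replicationFactor, int numBrokers) {
        if (replicationFactor < 1 || replicationFactor > numBrokers) {
            throw new IllegalArgumentException("replication factor must be between 1 and the broker count");
        }
        List<Integer> brokers = new ArrayList<>();
        for (int i = 0; i < replicationFactor; i++) {
            brokers.add((partition + i) % numBrokers);
        }
        return brokers;
    }

    /** Partitions that keep at least one replica on a broker that has not failed. */
    static List<Integer> survivingPartitions(int numPartitions, int replicationFactor,
                                             int numBrokers, Set<Integer> failedBrokers) {
        List<Integer> surviving = new ArrayList<>();
        for (int partition = 0; partition < numPartitions; partition++) {
            List<Integer> replicas = replicasFor(partition, replicationFactor, numBrokers);
            if (!failedBrokers.containsAll(replicas)) {
                surviving.add(partition);
            }
        }
        return surviving;
    }

    public static void main(String[] args) {
        Set<Integer> brokerZeroDown = Set.of(0);
        // 3 partitions on 3 brokers, broker 0 fails
        System.out.println(survivingPartitions(3, 1, 3, brokerZeroDown)); // prints [1, 2]
        System.out.println(survivingPartitions(3, 2, 3, brokerZeroDown)); // prints [0, 1, 2]
    }
}
```

With a replication factor of 1, the partition stored on the failed broker becomes unavailable; with a factor of 2, every partition still has a live copy. The replication factor cannot exceed the number of brokers, which is why the single-broker Docker setup in this tutorial uses 1.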

4.3 Creating Kafka topic

To create Kafka topics programmatically in a Spring Boot application on startup, you can utilize the KafkaAdmin bean provided by Spring Kafka.

- The KafkaTopicConfig class creates a KafkaAdmin bean for configuring the KafkaAdminClient, and a NewTopic bean using the provided topic name, number of partitions, and replication factor.

- During application startup, Spring Boot automatically initializes the KafkaAdmin bean and invokes the myTopic() method to create the specified topic.

- In your producer and consumer code, you can refer to the topic name configured in application.properties using the @Value annotation.

- You can then use the topicName variable to produce or consume messages from the dynamically created topic.

KafkaTopicConfig.java

package org.jcg.config;

import java.util.Map;

import org.apache.kafka.clients.admin.AdminClientConfig;
import org.apache.kafka.clients.admin.NewTopic;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.config.TopicBuilder;
import org.springframework.kafka.core.KafkaAdmin;

// Declares the beans that create the Kafka topic on startup
@Configuration
public class KafkaTopicConfig {

    @Value("${spring.kafka.bootstrap-servers}")
    private String bootstrapServers;

    @Value("${spring.kafka.topic-name}")
    private String topicName;

    @Value("${spring.kafka.topic-partitions}")
    private int numPartitions;

    @Value("${spring.kafka.topic-replication-factor}")
    private short replicationFactor;

    // Admin client used by Spring to create topics declared as NewTopic beans
    @Bean
    public KafkaAdmin kafkaAdmin() {
        return new KafkaAdmin(Map.of(
                AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers
        ));
    }

    // Topic definition built from the values in application.properties
    @Bean
    public NewTopic myTopic() {
        return TopicBuilder.name(topicName)
                .partitions(numPartitions)
                .replicas(replicationFactor)
                .build();
    }
}

With this setup, the Kafka topic will be created automatically when your Spring Boot application starts. Make sure you have a running Kafka cluster reachable at the configured bootstrap servers.

4.4 Producing Messages

In this example, the KafkaProducer class is annotated with @Component to be automatically managed by Spring. It injects the KafkaTemplate bean for interacting with Kafka and uses the @Value annotation to retrieve the topic name from application.properties.

KafkaProducer.java

package org.jcg.producer;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Component;

@Component
public class KafkaProducer {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    @Value("${spring.kafka.topic-name}")
    private String topicName;

    public void sendMessage(String message) {
        // send() is asynchronous and returns before the broker acknowledges the record.
        // No key is given, so the producer chooses the partition.
        kafkaTemplate.send(topicName, message);
        System.out.println("Message sent: " + message);
    }
}

To send a message using the Kafka producer, you can call the sendMessage method, passing the message content as a parameter. The producer will use the configured topic name to send the message to the Kafka topic.

Remember to configure the Kafka connection properties and topic name correctly in your application.properties file for this example to work properly.

4.5 Consuming Messages

In this example, the KafkaConsumer class is annotated with @Component to be automatically managed by Spring. Because annotation attributes must be compile-time constants, the topic name cannot be passed from a field injected with @Value; instead, the @KafkaListener annotation resolves it from application.properties through a property placeholder.

KafkaConsumer.java

package org.jcg.consumer;

import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

@Component
public class KafkaConsumer {

    // The placeholder is resolved from application.properties at startup
    @KafkaListener(topics = "${spring.kafka.topic-name}")
    public void receiveMessage(String message) {
        System.out.println("Received message: " + message);
        // Perform further processing of the received message
    }
}

The receiveMessage method is annotated with @KafkaListener, which names the topic to listen to through its topics attribute. When a new message arrives on the Kafka topic, the receiveMessage method is invoked and the message content is printed to the console. You can perform further processing of the received message as per your requirements.

Ensure that the Kafka connection properties and topic name are correctly configured in your application.properties file for this example to work correctly.

4.6 Create REST API to Send message

Create a controller package and, inside it, a KafkaProducerController class with the following content. This controller sends a message to the Kafka topic through the producer. The @RestController annotation marks the class as a Spring REST controller, and @RequestMapping maps incoming HTTP requests under /api/v1/kafka to the handler methods in this controller. @GetMapping maps HTTP GET requests for /api/v1/kafka/publish to the publish method, which reads the text to send from the required message query parameter.

KafkaProducerController.java

package org.jcg.controller;

import org.jcg.producer.KafkaProducer;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.*;
import org.springframework.beans.factory.annotation.Autowired;

@RestController
@RequestMapping("/api/v1/kafka")
public class KafkaProducerController {

    @Autowired // Automatic injection of bean
    private KafkaProducer kafkaProducer;

    @GetMapping("/publish")
    public ResponseEntity<String> publish(@RequestParam("message") String message) {
        kafkaProducer.sendMessage(message);
        return ResponseEntity.ok("Message sent to Kafka topic");
    }
}

4.7 Create Main class

In this main class, the @SpringBootApplication annotation is used to indicate that this is the main entry point for a Spring Boot application. It enables auto-configuration and component scanning.

The main() method starts the Spring Boot application by calling SpringApplication.run() and passing the main class (Application.class) along with any command-line arguments (args).

By running this main class, the Spring Boot application will start, and the controller class (KafkaProducerController) will be available to handle requests on the specified endpoints.

Application.java

package org.jcg;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class Application {

    public static void main(String[] args) {
        SpringApplication.run(Application.class, args);
    }
}

5. Output

Here’s a sample output to demonstrate how the KafkaProducer and KafkaConsumer classes work together. Open a browser and hit the link below to call the REST API, passing the text to send in the required message query parameter:

http://localhost:8080/api/v1/kafka/publish?message=Hello,%20Kafka!

The KafkaProducer class sends a message “Hello, Kafka!” to the Kafka topic.

Sample Output – KafkaProducer

Message sent: Hello, Kafka!

The KafkaConsumer class listens to the same Kafka topic and receives the message.

Sample Output – KafkaConsumer

Received message: Hello, Kafka!

In the sample output, you can see that the producer successfully sends the message, and the consumer receives and prints it to the console.
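The publish endpoint can also be called from code rather than a browser. The following is a minimal sketch using the JDK's built-in HTTP client (Java 11 or later); the class `PublishClient` is hypothetical, and it assumes the application runs on Spring Boot's default port 8080 as in the URL above. The message is URL-encoded because it is sent as a query parameter.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Calls the tutorial's publish endpoint; assumes the application is running locally. */
final class PublishClient {

    /** Builds the publish URL with the message URL-encoded as the "message" query parameter. */
    static URI publishUri(String baseUrl, String message) {
        String encoded = URLEncoder.encode(message, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/api/v1/kafka/publish?message=" + encoded);
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = HttpRequest
                .newBuilder(publishUri("http://localhost:8080", "Hello, Kafka!"))
                .GET()
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // Prints the HTTP status code followed by the controller's response body
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```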

6. Conclusion

In conclusion, Apache Kafka is a powerful messaging system that provides a scalable, fault-tolerant, and high-performance solution for handling real-time data streams. When combined with Spring Boot, it offers seamless integration and enables developers to build robust and efficient distributed applications.

Using Apache Kafka in a Spring Boot application brings several benefits. It provides reliable message queuing and delivery guarantees, ensuring that messages are processed in a distributed and fault-tolerant manner. Kafka’s distributed architecture allows for high throughput and low-latency data processing, making it suitable for use cases that require real-time data streaming and event-driven architectures.

7. Download the Project

This was a tutorial on Kafka topics and partitions, illustrated with a simple Spring Boot application.

You can download the full source code of this example here: Understanding Kafka Topics and Partitions