Rename Files and Folders in Amazon S3 Using Spring

Amazon S3 (Simple Storage Service) is a scalable object storage service offered by Amazon Web Services. It allows users to store and retrieve any amount of data from anywhere on the web. S3 has no native rename operation: renaming an object means copying it to a new key and then deleting the original. Let us explore how to rename files and folders in Amazon S3 from a Spring Boot application.

1. Introduction

Amazon Web Services (AWS) is a leading cloud computing platform provided by Amazon. It offers a wide range of services, including computing power, storage, databases, machine learning, analytics, and more, enabling businesses to build and deploy scalable and secure applications.

Amazon S3, or Simple Storage Service, is the AWS object storage service. Data is stored as objects, each identified by a key, inside containers called buckets.

1.1 Key Features of Amazon S3

- Scalability: S3 places no limit on the total amount of data stored in a bucket and scales with request traffic, making it suitable for businesses of any size.

- Durability and Reliability: S3 ensures 99.999999999% (11 9’s) durability of objects over a given year.

- Security: S3 offers robust data security features, including encryption, access control, and multi-factor authentication.

- Versioning: Users can preserve, retrieve, and restore every version of every object in their bucket.

- Flexible Storage Classes: S3 offers various storage classes, including Standard, Intelligent-Tiering, Glacier, and Deep Archive, allowing cost optimization based on usage.

- Highly Available: For most storage classes, S3 stores data redundantly across multiple Availability Zones within a region, ensuring high availability and fault tolerance.

- Easy Management: S3 provides a user-friendly management console and APIs for easy organization, retrieval, and manipulation of data.

1.2 Benefits of Amazon S3

Amazon S3 revolutionizes data storage and retrieval, providing businesses with unparalleled flexibility, security, and cost-efficiency. Its seamless integration with other AWS services makes it an ideal choice for developers, startups, and enterprises seeking reliable and scalable storage solutions.

2. Working Example

To work with S3 programmatically, let’s begin with the prerequisites. First, you’ll need an AWS account, which you can create by registering with AWS. Once your account is active, create an IAM user with full S3 access privileges and generate an access key and secret key for it. Finally, prepare two S3 buckets for the example, making sure both are located in the same region.

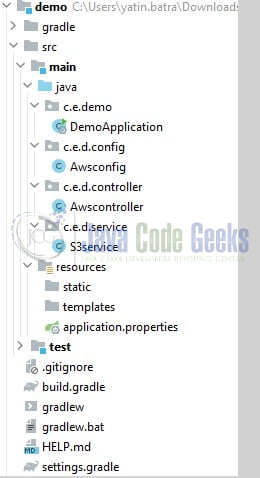

Let’s now explore some practical aspects. For those interested in examining the project structure, please refer to the image below.

2.1 Updating dependencies in build.gradle File

Set up a new Spring Boot project or use an existing one. Include the necessary dependencies in your project’s build.gradle file.

build.gradle

    plugins {
        id 'java'
        id 'org.springframework.boot' version '3.1.5'
        id 'io.spring.dependency-management' version '1.1.3'
    }

    group = 'com.example'
    version = '0.0.1-SNAPSHOT'

    java {
        sourceCompatibility = '17'
    }

    repositories {
        mavenCentral()
    }

    dependencies {
        implementation 'org.springframework.boot:spring-boot-starter-web'
        implementation 'com.amazonaws:aws-java-sdk:1.12.571'
        testImplementation 'org.springframework.boot:spring-boot-starter-test'
    }

    tasks.named('test') {
        useJUnitPlatform()
    }

2.2 Configure Application Properties File

Specify the following properties in the application.properties file:

- server.port=9030: Specifies that the application will run on port 9030.

- spring.application.name=springboot-aws-s3: Sets the name of the Spring Boot application as “springboot-aws-s3.”

- cloud.aws.region.static=YOUR_AWS_BUCKET_REGION: Specifies the AWS region where your S3 bucket is located.

- cloud.aws.region.credentials.access-key=YOUR_AWS_ACCESS_KEY: Specifies the AWS access key for authentication.

- cloud.aws.region.credentials.secret-key=YOUR_AWS_SECRET_KEY: Specifies the corresponding secret key associated with the access key.

- source.bucket-name=YOUR_AWS_SOURCE_BUCKET: Specifies the name of the source S3 bucket that the application will interact with.

application.properties

    ### spring configuration
    server.port=9030
    spring.application.name=springboot-aws-s3

    ### aws configuration
    # iam
    cloud.aws.region.static=YOUR_AWS_BUCKET_REGION
    cloud.aws.region.credentials.access-key=YOUR_AWS_ACCESS_KEY
    cloud.aws.region.credentials.secret-key=YOUR_AWS_SECRET_KEY

    # s3
    source.bucket-name=YOUR_AWS_SOURCE_BUCKET

2.3 Configure AWS Configuration Class

The given class is a Spring configuration class named Awsconfig. It is annotated with @Configuration, indicating that it contains bean definitions and should be processed by the Spring container during component scanning. Inside this class, the AWS access key, secret key, and region are obtained from properties files using the @Value annotation, which injects values from the specified properties.

The @Bean annotation marks the s3() method, designating it as a bean to be managed by the Spring container. Within this method, an instance of AWSCredentials is created using the retrieved access key and secret key. Then, an AWSStaticCredentialsProvider is initialized with these credentials.

The method further configures an AmazonS3 client using AmazonS3ClientBuilder. It sets the credentials provider to the one created earlier, and the AWS region is specified. The resulting AmazonS3 client is then built and returned as a managed bean. This configuration is essential for establishing a connection with AWS S3 services, allowing the application to interact with the specified S3 bucket securely.

Awsconfig.java

    package com.example.demo.config;

    import com.amazonaws.auth.AWSCredentials;
    import com.amazonaws.auth.AWSStaticCredentialsProvider;
    import com.amazonaws.auth.BasicAWSCredentials;
    import com.amazonaws.services.s3.AmazonS3;
    import com.amazonaws.services.s3.AmazonS3ClientBuilder;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;

    @Configuration
    public class Awsconfig {

        @Value("${cloud.aws.region.credentials.access-key}")
        private String key;

        @Value("${cloud.aws.region.credentials.secret-key}")
        private String secret;

        @Value("${cloud.aws.region.static}")
        private String region;

        @Bean
        public AmazonS3 s3() {
            AWSCredentials credentials = new BasicAWSCredentials(key, secret);
            AWSStaticCredentialsProvider provider = new AWSStaticCredentialsProvider(credentials);
            return AmazonS3ClientBuilder.standard()
                    .withCredentials(provider)
                    .withRegion(region)
                    .build();
        }
    }

2.4 Create a Service Class

The S3service class is annotated with @Service, indicating that it’s a Spring service component. It provides methods for managing files within Amazon S3 buckets. The class encapsulates interactions with Amazon S3, utilizing the AmazonS3 client provided by the AWS SDK. Below are the key components of the class explained in detail.

- The class has a constructor that injects an instance of AmazonS3 through Spring’s dependency injection. This ensures that the class can interact with the Amazon S3 service.

- listFiles(String bkt): This method lists files in the specified bucket. If the input bucket name is empty or null, it uses the default bucket defined in the bucket field.

- moveAndDeleteKeyFromSource(String sKey, String dBucket): Moves a specific file from the source bucket to a specified destination bucket. It also generates a dynamic name for the destination file based on the current timestamp.

- moveAllKeysToDestination(String dBucket): Moves all files from the source bucket to the specified destination bucket. It generates dynamic names for the destination files using the current timestamp.

- moveKeyToDestination(String sKey, String dKey, String dBucket): Moves a specific file from the source bucket to the specified destination bucket. It allows renaming the file in the destination bucket.

- getKeys(String bucketName): A private method that retrieves a list of S3ObjectSummary objects representing files in the specified bucket.

- getDynamicKeyName(String key): A private method that generates a dynamic name for a file using the current timestamp.

- copyAndDeleteOp(String sKey, String dKey, String dBucket): A private method that performs the copy operation from the source bucket to the destination bucket and deletes the file from the source bucket.
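The dynamic name described above comes from concatenating `LocalDateTime.now()` with the key, so the result contains colons and a variable-length fractional second, and the timestamp lands in front of any "folder" prefix. As a minimal sketch of one alternative, assuming a fixed `yyyyMMdd-HHmmss` pattern is acceptable, the helper below stamps only the last path segment. The class `KeyNames`, the method `timestamped`, and the pattern are hypothetical and not part of the project.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class KeyNames {

    // Hypothetical pattern: fixed width, no colons, sorts chronologically as text.
    private static final DateTimeFormatter STAMP =
            DateTimeFormatter.ofPattern("yyyyMMdd-HHmmss");

    // Prefixes only the last path segment, so any "folder" prefix in the key is preserved.
    public static String timestamped(String key, LocalDateTime when) {
        int slash = key.lastIndexOf('/');
        String prefix = key.substring(0, slash + 1); // empty when the key has no slash
        String name = key.substring(slash + 1);
        return prefix + STAMP.format(when) + "_" + name;
    }

    public static void main(String[] args) {
        LocalDateTime when = LocalDateTime.of(2023, 11, 5, 9, 30, 0);
        System.out.println(timestamped("reports/sales.csv", when)); // reports/20231105-093000_sales.csv
        System.out.println(timestamped("notes.txt", when));         // 20231105-093000_notes.txt
    }
}
```

Passing the timestamp in as a parameter, rather than calling `now()` inside the method, keeps the helper deterministic and easy to test.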

S3service.java

    package com.example.demo.service;

    import com.amazonaws.services.s3.AmazonS3;
    import com.amazonaws.services.s3.model.S3ObjectSummary;
    import com.amazonaws.util.StringUtils;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.stereotype.Service;

    import java.time.LocalDateTime;
    import java.util.ArrayList;
    import java.util.List;
    import java.util.stream.Collectors;

    @Service
    public class S3service {

        private final AmazonS3 s3;

        @Value("${source.bucket-name}")
        private String bucket;

        @Autowired
        public S3service(AmazonS3 amazonS3) {
            this.s3 = amazonS3;
        }

        public List<String> listFiles(String bkt) {
            // if method input is empty or null use default bucket else input
            String bucketName = StringUtils.isNullOrEmpty(bkt) ? bucket : bkt;
            return getKeys(bucketName).stream()
                    .map(S3ObjectSummary::getKey)
                    .collect(Collectors.toList());
        }

        public String moveAndDeleteKeyFromSource(String sKey, String dBucket) {
            String dKey = getDynamicKeyName(sKey);
            copyAndDeleteOp(sKey, dKey, dBucket);
            return "done";
        }

        public List<String> moveAllKeysToDestination(String dBucket) {
            List<String> names = new ArrayList<>();
            // get keys from source bucket
            for (S3ObjectSummary objectSummary : getKeys(bucket)) {
                String sKey = objectSummary.getKey();
                String dKey = getDynamicKeyName(sKey);
                copyAndDeleteOp(sKey, dKey, dBucket);
                names.add(sKey);
            }
            return names;
        }

        public String moveKeyToDestination(String sKey, String dKey, String dBucket) {
            // get keys from source bucket
            for (S3ObjectSummary objectSummary : getKeys(bucket)) {
                // filter given key
                if (objectSummary.getKey().equals(sKey)) {
                    copyAndDeleteOp(sKey, dKey, dBucket);
                }
            }
            // note: the same message is returned even when the key was not found
            return "renamed.";
        }

        // private methods.

        private List<S3ObjectSummary> getKeys(String bucketName) {
            // note: a single listObjectsV2 call returns at most 1000 keys
            return s3.listObjectsV2(bucketName).getObjectSummaries();
        }

        private String getDynamicKeyName(String key) {
            return LocalDateTime.now() + "_" + key;
        }

        private void copyAndDeleteOp(String sKey, String dKey, String dBucket) {
            // copy key from source bucket to destination bucket
            s3.copyObject(bucket, sKey, dBucket, dKey);
            // delete object from source bucket
            s3.deleteObject(bucket, sKey);
        }
    }
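S3 has no real folders: a "folder" is just a shared key prefix such as `invoices/2023/`. Renaming a folder therefore comes down to applying the same copy-then-delete step to every key under that prefix. The sketch below shows only the key mapping, on plain strings and without calling S3. The class `PrefixRename` and the method `plan` are hypothetical names introduced for illustration.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class PrefixRename {

    // Maps each key under oldPrefix to its new key under newPrefix.
    // Keys that do not start with oldPrefix are left out of the plan.
    public static Map<String, String> plan(List<String> keys, String oldPrefix, String newPrefix) {
        Map<String, String> moves = new LinkedHashMap<>();
        for (String key : keys) {
            if (key.startsWith(oldPrefix)) {
                moves.put(key, newPrefix + key.substring(oldPrefix.length()));
            }
        }
        return moves;
    }

    public static void main(String[] args) {
        List<String> keys = List.of("invoices/2023/jan.pdf", "invoices/2023/feb.pdf", "readme.txt");
        plan(keys, "invoices/2023/", "archive/2023/")
                .forEach((from, to) -> System.out.println(from + " -> " + to));
    }
}
```

Each source and destination pair in the plan could then be handed to a copy-then-delete step like the one in copyAndDeleteOp. Ending both prefixes with `/` avoids accidentally matching sibling prefixes such as `invoices/2023-old/`.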

2.5 Create a Controller Class

The Awscontroller class is annotated with @RestController, indicating that it’s a Spring REST controller. It exposes several endpoints for performing operations on Amazon S3 buckets. The class relies on the S3service for actual S3 interactions.

The class has a constructor that injects an instance of S3service using Spring’s dependency injection.

The Awscontroller class defines several endpoints to perform different S3 operations. Let’s explore these endpoints:

- GET /api/list: This endpoint lists files in a specified S3 bucket. Clients can provide the bucket name as a query parameter.

- GET /api/moveAndDelete: This endpoint moves a specific file from the source bucket to a destination bucket and deletes it from the source bucket. Clients need to provide the source key and destination bucket as query parameters.

- GET /api/move-all: This endpoint moves all files from the source bucket to a specified destination bucket. Clients need to provide the destination bucket as a query parameter.

- GET /api/rename: This endpoint moves a specific file from the source bucket to a destination bucket with a new key name and deletes it from the source bucket. Clients need to provide the source key, destination key, and destination bucket as query parameters.

Awscontroller.java

    package com.example.demo.controller;

    import com.example.demo.service.S3service;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.http.HttpStatus;
    import org.springframework.web.bind.annotation.*;

    import java.util.List;

    @RestController
    @RequestMapping(value = "/api")
    public class Awscontroller {

        private final S3service service;

        @Autowired
        public Awscontroller(S3service s3service) {
            this.service = s3service;
        }

        // curl 'http://localhost:9030/api/list?bucket=your_destination_bucket'
        @GetMapping(value = "/list")
        @ResponseStatus(HttpStatus.OK)
        public List<String> getAllFiles(@RequestParam(name = "bucket", required = false) String bucket) {
            return service.listFiles(bucket);
        }

        // curl 'http://localhost:9030/api/moveAndDelete?source-key=your_source_key&destination-bucket=your_destination_bucket'
        @GetMapping(value = "/moveAndDelete")
        @ResponseStatus(HttpStatus.OK)
        public String moveAndDelete(@RequestParam("source-key") String sKey, @RequestParam("destination-bucket") String dBucket) {
            // skipping key name validation
            // skipping destination bucket validation
            // destination bucket should be in same region as source bucket
            return service.moveAndDeleteKeyFromSource(sKey, dBucket);
        }

        // curl 'http://localhost:9030/api/move-all?destination-bucket=your_destination_bucket'
        @GetMapping(value = "/move-all")
        @ResponseStatus(HttpStatus.OK)
        public List<String> moveAll(@RequestParam("destination-bucket") String dBucket) {
            // skipping destination bucket validation
            // destination bucket should be in same region as source bucket
            return service.moveAllKeysToDestination(dBucket);
        }

        // curl 'http://localhost:9030/api/rename?source-key=your_source_key&destination-key=your_destination_key&destination-bucket=your_destination_bucket'
        @GetMapping(value = "/rename")
        @ResponseStatus(HttpStatus.OK)
        public String rename(@RequestParam("source-key") String sKey,
                             @RequestParam("destination-key") String dKey,
                             @RequestParam("destination-bucket") String dBucket) {
            // skipping key name validation
            // skipping destination bucket validation
            // destination bucket should be in same region as source bucket
            return service.moveKeyToDestination(sKey, dKey, dBucket);
        }
    }
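The controller comments note that key and bucket validation are skipped. As a rough sketch of what such checks might look like, the helper below tests a bucket name against the basic S3 naming shape (3 to 63 characters, lowercase letters, digits, dots and hyphens, starting and ending with a letter or digit) and a key against the 1024-byte UTF-8 limit. The class `S3Names` and its methods are hypothetical, and the bucket check is deliberately partial: it does not cover every S3 naming rule, such as the ban on names formatted like IP addresses.

```java
import java.nio.charset.StandardCharsets;
import java.util.regex.Pattern;

public class S3Names {

    // Partial check only: length 3-63, lowercase letters, digits, dots and hyphens,
    // starting and ending with a letter or digit.
    private static final Pattern BUCKET =
            Pattern.compile("^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$");

    public static boolean isPlausibleBucket(String name) {
        return name != null && BUCKET.matcher(name).matches();
    }

    // Object keys must be non-empty and at most 1024 bytes when UTF-8 encoded.
    public static boolean isPlausibleKey(String key) {
        if (key == null || key.isEmpty()) {
            return false;
        }
        return key.getBytes(StandardCharsets.UTF_8).length <= 1024;
    }

    public static void main(String[] args) {
        System.out.println(isPlausibleBucket("my-bucket.logs")); // true
        System.out.println(isPlausibleBucket("My_Bucket"));      // false
        System.out.println(isPlausibleKey("reports/sales.csv")); // true
        System.out.println(isPlausibleKey(""));                  // false
    }
}
```

A controller method could call these checks first and reject the request with a 400 response before touching S3.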

2.6 Create Main Class

In this main class, the @SpringBootApplication annotation is used to indicate that this is the main entry point for a Spring Boot application. It enables auto-configuration and component scanning.

The main() method starts the Spring Boot application by calling SpringApplication.run() and passing the main class DemoApplication.class along with any command-line arguments args.

DemoApplication.java

    package com.example.demo;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    public class DemoApplication {

        public static void main(String[] args) {
            SpringApplication.run(DemoApplication.class, args);
        }
    }

3. Output

Start the Spring Boot application by running the DemoApplication.java class from the IDE, then import the cURL requests below into Postman or run them from a terminal. Each request is an HTTP GET, handled by the controller method mapped to the requested URL.

cURL requests

    # To list the keys present in a bucket.
    # The bucket query parameter is optional.
    curl 'http://localhost:9030/api/list?bucket=your_destination_bucket'

    # To move a key from the source bucket to the destination bucket under a timestamped name.
    curl 'http://localhost:9030/api/moveAndDelete?source-key=your_source_key&destination-bucket=your_destination_bucket'

    # To move all keys from the source bucket to the destination bucket.
    curl 'http://localhost:9030/api/move-all?destination-bucket=your_destination_bucket'

    # To move a key from the source bucket to the destination bucket under a new key name.
    curl 'http://localhost:9030/api/rename?source-key=your_source_key&destination-key=your_destination_key&destination-bucket=your_destination_bucket'
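Object keys often contain characters such as `/`, spaces, or `&` that need encoding before they can travel as query parameters. The sketch below builds a request URL for the controller's `/rename` mapping with each value form-encoded. The class `RenameUrl` and the method `build` are hypothetical, and the base URL simply reuses the port from this example.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

public class RenameUrl {

    // Builds the /api/rename URL with each query value form-encoded.
    public static String build(String baseUrl, String sourceKey,
                               String destinationKey, String destinationBucket) {
        return baseUrl + "/api/rename"
                + "?source-key=" + enc(sourceKey)
                + "&destination-key=" + enc(destinationKey)
                + "&destination-bucket=" + enc(destinationBucket);
    }

    // URLEncoder applies form encoding: a space becomes '+', '/' becomes %2F, '&' becomes %26.
    private static String enc(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(build("http://localhost:9030",
                "reports/q4 draft.csv", "reports/q4&final.csv", "my-bucket"));
    }
}
```

Without encoding, an `&` inside a key would be read as the start of the next query parameter and the key would be truncated.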

Note – The application will operate on port 9030. Feel free to modify the port number by adjusting the server.port property in the application.properties file.

4. Conclusion

In conclusion, Amazon S3 has no rename operation, so renaming or moving an object means copying it to a new key and deleting the original. This article wired that copy-and-delete step into a Spring Boot application: a configuration class builds the AmazonS3 client, a service class lists, moves, and renames keys, and a REST controller exposes those operations as endpoints. The example deliberately skips key and bucket validation, as the controller comments note, so a production application should add validation, error handling, and proper authentication and authorization on top of it.

5. Download the Project

This tutorial served as a guide to exploring Amazon S3 functionality within a Spring Boot application.

You can download the full source code of this example here: How To Rename Files and Folders in Amazon S3