Lucene StandardAnalyzer Example

In this example, we are going to learn about Lucene's StandardAnalyzer class. Here, we go through the simple and fundamental concepts behind the StandardAnalyzer class. In my earlier post, we went through the different searching options and features that Lucene provides through the QueryParser class. This post aims to show how the StandardAnalyzer is used in practice.

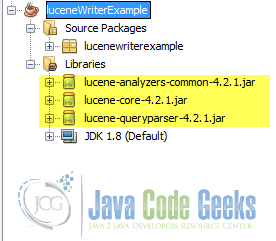

The code in this example was developed in NetBeans IDE 8.0.2. In this example, I am continuing with Lucene version 4.2.1. It is always better to try this with the latest version.

1. StandardAnalyzer Class

StandardAnalyzer is the basic analyzer class in the Lucene analyzers library. It combines StandardTokenizer with StandardFilter, LowerCaseFilter and StopFilter, using a list of English stop words. It is the more sophisticated analyzer, as it can handle tokens such as email addresses, names and numbers.

Usage

StandardAnalyzer analyzer = new StandardAnalyzer(Version.LUCENE_42);

Note: You need to add “lucene-analyzers-common-4.2.1.jar” to your project to use StandardAnalyzer.

2. Class Declaration

The class is declared in the package org.apache.lucene.analysis.standard as:

public final class StandardAnalyzer extends StopwordAnalyzerBase

‘matchVersion’ and ‘stopwords’ are fields inherited from the class org.apache.lucene.analysis.util.StopwordAnalyzerBase.

package org.apache.lucene.analysis.standard;

import java.io.IOException;
import java.io.Reader;
import org.apache.lucene.analysis.util.CharArraySet;
import org.apache.lucene.analysis.util.StopwordAnalyzerBase;
import org.apache.lucene.util.Version;

public final class StandardAnalyzer extends StopwordAnalyzerBase {

    public static final int DEFAULT_MAX_TOKEN_LENGTH = 255;
    private int maxTokenLength;
    public static final CharArraySet STOP_WORDS_SET;

    public StandardAnalyzer(Version matchVersion, CharArraySet stopWords) {
        /* ... */
    }

    public StandardAnalyzer(Version matchVersion) {
        /* ... */
    }

    public StandardAnalyzer(Version matchVersion, Reader stopwords) throws IOException {
        /* ... */
    }

    public void setMaxTokenLength(int length) {
        /* ... */
    }

    public int getMaxTokenLength() {
        /* ... */
    }

    protected TokenStreamComponents createComponents(String fieldName, Reader reader) {
        /* ... */
    }
}

3. What’s a typical analyzer for?

A typical analyzer builds TokenStreams that turn text or data into the terms to be indexed, so it carries the rules for how each indexed field is broken down. A Tokenizer first breaks the stream of characters from the Reader into raw tokens. TokenFilters are then applied to that token stream to modify it, for example by lower-casing tokens or removing stop words. Some of the available analyzers are KeywordAnalyzer, PerFieldAnalyzerWrapper, SimpleAnalyzer, StandardAnalyzer, StopAnalyzer, WhitespaceAnalyzer and so on. StandardAnalyzer is the most sophisticated of these.
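To make the tokenizer-then-filters chain concrete, here is a minimal plain-Java sketch of the same idea. It does not use Lucene at all: the class AnalyzerPipelineSketch, its method names and its tiny stop-word list are assumptions made up for this illustration, and the splitting rule is far simpler than the grammar StandardTokenizer uses.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Plain-Java illustration of an analysis chain; this is NOT Lucene code.
final class AnalyzerPipelineSketch {

    // Hypothetical stop-word list for this sketch (Lucene ships its own English set).
    static final Set<String> STOP_WORDS =
            new HashSet<>(Arrays.asList("a", "an", "and", "is", "of", "the", "to"));

    // Step 1, the "tokenizer": break the text into raw tokens at anything
    // that is not a letter or a digit.
    static List<String> tokenize(String text) {
        List<String> tokens = new ArrayList<>();
        for (String piece : text.split("[^\\p{L}\\p{N}]+")) {
            if (!piece.isEmpty()) {
                tokens.add(piece);
            }
        }
        return tokens;
    }

    // Step 2, a "lower-case filter": normalise every token to lower case.
    static List<String> lowerCase(List<String> tokens) {
        List<String> result = new ArrayList<>();
        for (String token : tokens) {
            result.add(token.toLowerCase(Locale.ROOT));
        }
        return result;
    }

    // Step 3, a "stop filter": drop tokens that are in the stop-word list.
    static List<String> removeStopWords(List<String> tokens) {
        List<String> result = new ArrayList<>();
        for (String token : tokens) {
            if (!STOP_WORDS.contains(token)) {
                result.add(token);
            }
        }
        return result;
    }

    // The "analyzer": the tokenizer followed by the filters, in order.
    static List<String> analyze(String text) {
        return removeStopWords(lowerCase(tokenize(text)));
    }

    public static void main(String[] args) {
        // prints [quick, brown, fox, ready, jump]
        System.out.println(analyze("The Quick Brown Fox is ready to jump"));
    }
}
```

The order of the steps matters: lower-casing runs before the stop filter, so that "The" is matched against the lower-case entry "the".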

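4. What’s the StandardTokenizer for?

public final class StandardTokenizer extends Tokenizer

StandardTokenizer is a grammar-based tokenizer constructed with JFlex. Note that since Lucene 3.1 it follows the word-break rules of Unicode Standard Annex #29, and the classic behaviour described here is kept in ClassicTokenizer. The classic grammar: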

- Splits words at punctuation characters, removing punctuation. However, a dot that’s not followed by whitespace is considered part of a token.

- Splits words at hyphens, unless there’s a number in the token, in which case the whole token is interpreted as a product number and is not split.

- Recognizes email addresses and internet hostnames as one token.
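The hyphen rule in the list above can be sketched in a few lines of plain Java. This is only an illustration of that single rule as stated, not the JFlex grammar Lucene uses; the class HyphenRuleSketch and its methods are hypothetical names introduced for this example.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java illustration of the hyphen rule; this is NOT Lucene code.
final class HyphenRuleSketch {

    // Returns true if the token contains at least one digit.
    static boolean containsDigit(String token) {
        for (int i = 0; i < token.length(); i++) {
            if (Character.isDigit(token.charAt(i))) {
                return true;
            }
        }
        return false;
    }

    // Splits a token at hyphens, unless it contains a digit, in which case
    // it is treated as a product number and kept whole.
    static List<String> splitHyphenated(String token) {
        List<String> parts = new ArrayList<>();
        if (containsDigit(token)) {
            parts.add(token);
            return parts;
        }
        for (String part : token.split("-")) {
            if (!part.isEmpty()) {
                parts.add(part);
            }
        }
        return parts;
    }

    public static void main(String[] args) {
        System.out.println(splitHyphenated("wi-fi"));   // prints [wi, fi]
        System.out.println(splitHyphenated("XL-5000")); // prints [XL-5000]
    }
}
```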

Many applications have specific tokenizer requirements. If this tokenizer does not suit your scenario, consider copying its source code directory to your project and maintaining your own grammar-based tokenizer.

5. Constructors and Methods

5.1 Constructors

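In Lucene 4.2.1, each constructor takes the Version to match as its first argument, as in the class declaration above.

- public StandardAnalyzer(Version matchVersion): builds an analyzer with the default stop words (STOP_WORDS_SET).

- public StandardAnalyzer(Version matchVersion, CharArraySet stopWords): builds an analyzer with the given stop words.

- public StandardAnalyzer(Version matchVersion, Reader stopwords) throws IOException: builds an analyzer with the stop words from the given reader.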
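The Reader-based constructor takes its stop words from a character stream. As a rough picture of what reading stop words from a Reader involves, here is a plain-Java sketch that assumes one word per line. It does not call Lucene; the class StopWordReaderSketch and the method readStopWords are hypothetical names for this illustration, and the one-word-per-line layout is an assumption you should check against the Lucene documentation for your version.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.util.LinkedHashSet;
import java.util.Set;

// Plain-Java illustration of loading stop words; this is NOT Lucene code.
final class StopWordReaderSketch {

    // Reads one stop word per line, trimming whitespace and skipping blank lines.
    // Like the Reader-based constructor, it can fail with an IOException.
    static Set<String> readStopWords(Reader reader) throws IOException {
        Set<String> words = new LinkedHashSet<>();
        try (BufferedReader in = new BufferedReader(reader)) {
            String line;
            while ((line = in.readLine()) != null) {
                String word = line.trim();
                if (!word.isEmpty()) {
                    words.add(word);
                }
            }
        }
        return words;
    }

    public static void main(String[] args) throws IOException {
        // prints [the, and, of]
        System.out.println(readStopWords(new StringReader("the\nand\n\n of \n")));
    }
}
```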

5.2 Some Methods

- public void setMaxTokenLength(int length): sets the maximum allowed token length. A token that exceeds this length is discarded. The setting only takes effect the next time tokenStream is called.

- public int getMaxTokenLength(): returns the current maximum token length.

- protected Analyzer.TokenStreamComponents createComponents(String fieldName, Reader reader): creates the TokenStreamComponents (the tokenizer and its filter chain) used to analyze the text read from the given reader for the given field.
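The effect of the maximum token length can be pictured with a small plain-Java sketch. It only mirrors the behaviour described above (tokens longer than the limit are discarded) and does not call Lucene; the class MaxTokenLengthSketch and the method keepAllowed are hypothetical names for this illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java illustration of a maximum token length; this is NOT Lucene code.
final class MaxTokenLengthSketch {

    // Same default value as StandardAnalyzer.DEFAULT_MAX_TOKEN_LENGTH.
    static final int DEFAULT_MAX_TOKEN_LENGTH = 255;

    private int maxTokenLength = DEFAULT_MAX_TOKEN_LENGTH;

    void setMaxTokenLength(int length) {
        maxTokenLength = length;
    }

    int getMaxTokenLength() {
        return maxTokenLength;
    }

    // Keeps tokens whose length is within the limit and discards the rest.
    List<String> keepAllowed(List<String> tokens) {
        List<String> kept = new ArrayList<>();
        for (String token : tokens) {
            if (token.length() <= maxTokenLength) {
                kept.add(token);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        MaxTokenLengthSketch sketch = new MaxTokenLengthSketch();
        sketch.setMaxTokenLength(5);
        // prints [index, doc]
        System.out.println(sketch.keepAllowed(Arrays.asList("search", "index", "lucene", "doc")));
    }
}
```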

5.3 Fields

- public static final int DEFAULT_MAX_TOKEN_LENGTH: the default maximum allowed token length (255).

- public static final CharArraySet STOP_WORDS_SET: an unmodifiable set of common English words that are usually not useful for searching.

6. Things to consider

- You must specify the required Version compatibility when creating StandardAnalyzer.

- This should be a good tokenizer for most European-language documents.

- If this tokenizer does not suit your scenario, consider copying its source code directory to your project and maintaining your own grammar-based tokenizer.

7. Download the NetBeans project

You can download the full source code of the example here: Lucene Example Code